Getting Started with Apache Spark and Arctic

Dremio Arctic is a data lakehouse management service that lets you manage your data as code with Git-like operations and automatically optimizes your data for performance. Arctic provides a catalog for Apache Iceberg tables in your data lakehouse. By leveraging the open source Project Nessie, it lets you use Git-like operations for isolation, version control, and rollback of your datasets. These operations make it easy and efficient to experiment with, update, and audit your data without making copies of it. You can use an Arctic catalog with different data lakehouse engines, including Dremio Sonar for lightning-fast queries, Apache Spark for data processing, and Apache Flink for streaming data. For more information on Arctic, see Dremio Arctic.

Goal

This tutorial introduces the basics of Arctic, using Arctic as the catalog to manage data as code. It also shows how Arctic integrates with Apache Spark, which you'll use as the engine for working with sample tables. In this tutorial, you will configure your existing Spark environment, obtain the credentials needed to connect Spark to Arctic, and join data from the sample tables to create a new table.

After completing this tutorial, you'll know how to:

- Create a new development branch where you can make updates in isolation from the main branch.

- Work with the tables in your development branch to create a new table.

- Atomically merge your changes into the main branch.

- Validate the merge.

Prerequisites

Before you can experience Arctic in Spark, you'll need to have a Spark environment available. Additionally, you'll need to:

- Verify that you have an account in an existing Dremio organization

- Generate a personal access token

- Set up the Spark environment

- Create tables with sample data

Verify That You Have an Account in an Existing Dremio Organization

Before you begin, you need an active account within an existing Dremio organization. If you do not have a Dremio account yet, ask your administrator to add you to an existing organization, or sign up for Dremio Cloud.

Generate a Personal Access Token

Generate a new personal access token (PAT). A PAT provides an easy way for you to connect to Dremio Cloud through the JDBC interface. In this tutorial, it also authorizes Spark to access your Arctic catalog. For information on how to generate one, see Personal Access Tokens.

Set Up the Spark Environment

For this tutorial, you'll configure your existing Spark environment with the environment variables and properties needed to connect Spark to Arctic.

- Provide your AWS credentials so that Spark can connect to the Amazon S3 bucket where your tables reside. In the following commands, replace `<AWS_SECRET_ACCESS_KEY>`, `<AWS_ACCESS_KEY_ID>`, and `<AWS_REGION>` with your credentials, and then run them:

```shell
export AWS_SECRET_ACCESS_KEY=<AWS_SECRET_ACCESS_KEY>
export AWS_ACCESS_KEY_ID=<AWS_ACCESS_KEY_ID>
export AWS_REGION=<AWS_REGION>
```

- To connect Apache Spark to Arctic, you need to set the following properties when you start the Spark shell: `<ARCTIC_URI>`, `<TOKEN>`, and `<WAREHOUSE>`. You also need to set the authentication type.

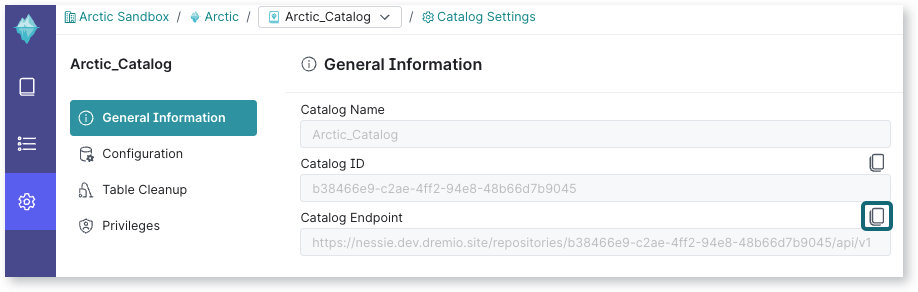

- ARCTIC_URI: The endpoint for your Arctic catalog. To get this endpoint, log in to your Dremio account, open the Arctic catalog, and then click the Project Settings icon, as shown in the image above. Copy the Catalog Endpoint, which you'll use as the ARCTIC_URI.

- BEARER: Set the authentication type to BEARER.

- TOKEN: The PAT that you generated in Dremio, which authorizes Spark to access your Arctic catalog.

- WAREHOUSE: To write to your catalog, you must specify the location where the files are stored. The URI must include the trailing slash, for example `s3://my-bucket-name/path/`.

Example initialization:

```shell
spark-shell --packages org.apache.iceberg:iceberg-spark-runtime-3.2_2.12:0.14.1,org.projectnessie:nessie-spark-extensions:0.44.0,software.amazon.awssdk:bundle:2.17.178,software.amazon.awssdk:url-connection-client:2.17.178 \
  --conf spark.sql.extensions="org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions,org.projectnessie.spark.extensions.NessieSpark32SessionExtensions" \
  --conf spark.sql.catalog.arctic.uri=https://nessie.dremio.cloud/repositories/52e5d5db-f48d-4878-b429-815ge9fdw4c6/api/v1 \
  --conf spark.sql.catalog.arctic.ref=main \
  --conf spark.sql.catalog.arctic.authentication.type=BEARER \
  --conf spark.sql.catalog.arctic.catalog-impl=org.apache.iceberg.nessie.NessieCatalog \
  --conf spark.sql.catalog.arctic.io-impl=org.apache.iceberg.aws.s3.S3FileIO \
  --conf spark.sql.catalog.arctic=org.apache.iceberg.spark.SparkCatalog \
  --conf spark.sql.catalog.arctic.authentication.token=RDViJJHrS/u+JAwrzQVV2+kAuLxiNkbTgdWQKQhAUS72o2BMKuRWDnjuPEjACw== \
  --conf spark.sql.catalog.arctic.warehouse=s3://arctic_test_bucket/
```
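The catalog properties in the example initialization follow a fixed pattern under the `spark.sql.catalog.arctic` prefix. Because of that, they can be assembled from the three values you supply. The following Scala sketch does this and appends the required trailing slash to the warehouse location if it is missing. `ArcticConf`, `properties`, `normalizeWarehouse`, and `confArgs` are hypothetical names used only for illustration; they are not part of Spark, Iceberg, or Nessie. The property keys and values are copied from the example initialization.

```scala
// Hypothetical helper (not a Dremio, Iceberg, or Nessie API): builds the
// Arctic catalog properties used in the example spark-shell command.
object ArcticConf {
  private val Prefix = "spark.sql.catalog.arctic"

  // The warehouse URI must end with a trailing slash; add one if it is missing.
  def normalizeWarehouse(location: String): String =
    if (location.endsWith("/")) location else location + "/"

  // Returns every property from the example initialization, keyed by name.
  def properties(arcticUri: String, token: String, warehouse: String, ref: String = "main"): Map[String, String] =
    Map(
      "spark.sql.extensions" ->
        ("org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions," +
          "org.projectnessie.spark.extensions.NessieSpark32SessionExtensions"),
      Prefix -> "org.apache.iceberg.spark.SparkCatalog",
      s"$Prefix.catalog-impl" -> "org.apache.iceberg.nessie.NessieCatalog",
      s"$Prefix.io-impl" -> "org.apache.iceberg.aws.s3.S3FileIO",
      s"$Prefix.uri" -> arcticUri,
      s"$Prefix.ref" -> ref,
      s"$Prefix.authentication.type" -> "BEARER",
      s"$Prefix.authentication.token" -> token,
      s"$Prefix.warehouse" -> normalizeWarehouse(warehouse)
    )

  // Renders the properties as `--conf key=value` argument pairs, sorted by key
  // so the output is stable.
  def confArgs(props: Map[String, String]): Seq[String] =
    props.toSeq.sortBy(_._1).flatMap { case (key, value) => Seq("--conf", s"$key=$value") }
}
```

In a standalone Spark application, you could instead pass each entry to `SparkSession.builder().config(key, value)` before calling `getOrCreate()`. The packages listed in `--packages` would still need to be on the classpath.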

Create Tables with Sample Data

First, you'll create two tables containing sample data of a fictitious business:

- Table 1: employee information (employee ID, name, manager employee ID, employee department ID, and the year joined)

- Table 2: department information (department ID and department name)

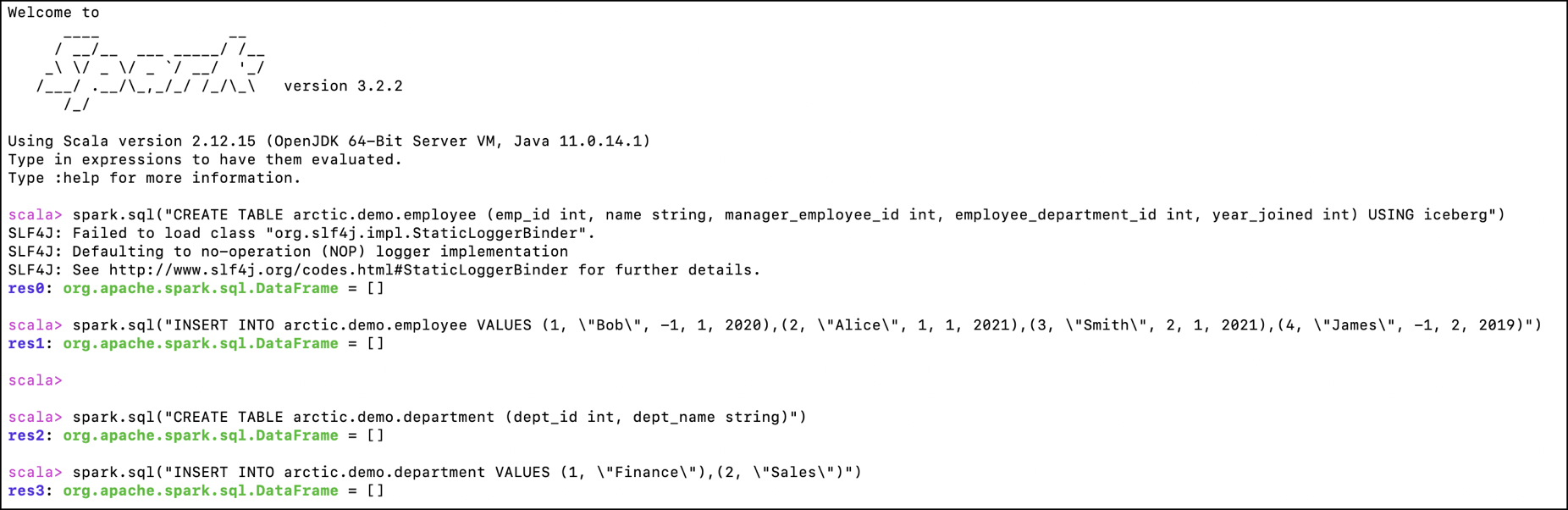

Run the following commands from the Spark shell prompt to create the first table:

```scala
// Creating the first table
spark.sql("CREATE TABLE arctic.demo.employee (emp_id int, name string, manager_employee_id int, employee_department_id int, year_joined int) USING iceberg")
spark.sql("INSERT INTO arctic.demo.employee VALUES (1, \"Bob\", -1, 1, 2020),(2, \"Alice\", 1, 1, 2021),(3, \"Smith\", 2, 1, 2021),(4, \"James\", -1, 2, 2019)")
```

Then run the following commands to create the second table:

```scala
// Creating the second table
spark.sql("CREATE TABLE arctic.demo.department (dept_id int, dept_name string)")
spark.sql("INSERT INTO arctic.demo.department VALUES (1, \"Finance\"),(2, \"Sales\")")
```

When the tables are created, you will see the confirmation message `org.apache.spark.sql.DataFrame = []`.

Now that you have set up the Spark environment and have the sample tables ready, you're ready to use Spark with Arctic! In the following steps, you will:

- Create a new branch of the tables.

- Create a new table in the branch.

- Merge your changes into the main branch.

- Validate the merge.

Step 1. Create a New Branch of the Tables

In this step, you'll create a branch in your catalog to isolate changes from the main branch. As with Git's version control semantics for code, a branch in Arctic represents an independent line of development isolated from the main branch. Arctic does this without copying any data, and it lets you update, insert, join, and delete data in tables on new branches without affecting the original tables in the main branch. When you are ready to update the main branch with changes from a secondary branch, you can merge them (Step 3). For more information on branches and Arctic's Git-like operations, see Git-like Data Management.
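To make the branching model concrete, here is a toy Scala model of Git-like branches under a deliberately simplified design. Each commit is an immutable snapshot of table metadata, and a branch is just a named pointer to a commit. This is not how Nessie or Arctic are implemented, and `ToyCatalog` and its methods are hypothetical. The model shows three things: why creating a branch copies no data, why commits on one branch leave main unchanged, and how the simplest kind of merge works. That merge is a fast-forward, which applies only when main has not moved since the branch was created.

```scala
// Conceptual model only: not the Nessie/Arctic implementation.
// A commit is an immutable snapshot mapping table names to data locations.
final case class Commit(id: Int, parent: Option[Int], tables: Map[String, String])

final class ToyCatalog {
  private var commits: Map[Int, Commit] = Map(0 -> Commit(0, None, Map.empty))
  // A branch is only a name pointing at a commit ID.
  private var heads: Map[String, Int] = Map("main" -> 0)

  def head(branch: String): Commit = commits(heads(branch))

  // Creating a branch records a new pointer; no table data is copied.
  def createBranch(name: String, from: String): Unit =
    heads += name -> heads(from)

  // Committing adds a new snapshot and moves only this branch's pointer.
  def commit(branch: String, table: String, location: String): Unit = {
    val parent = head(branch)
    val next = Commit(commits.size, Some(parent.id), parent.tables + (table -> location))
    commits += next.id -> next
    heads += branch -> next.id
  }

  // Fast-forward merge: valid only if `into` is an ancestor of `from`.
  def mergeFastForward(from: String, into: String): Unit = {
    require(isAncestor(heads(into), heads(from)), s"$into has diverged; a real merge is needed")
    heads += into -> heads(from)
  }

  // Walks parent links from `of` back toward the root, looking for `ancestor`.
  private def isAncestor(ancestor: Int, of: Int): Boolean =
    ancestor == of || commits(of).parent.exists(isAncestor(ancestor, _))
}
```

For example, after `createBranch("demo_etl", from = "main")` and a commit on `demo_etl`, `head("main")` still lacks the new table until `mergeFastForward("demo_etl", "main")` moves main's pointer.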

Run the following command to create a secondary branch named demo_etl:

```scala
spark.sql("CREATE BRANCH IF NOT EXISTS demo_etl IN arctic")
```

Then switch to the demo_etl branch:

```scala
spark.sql("USE REFERENCE demo_etl IN arctic")
```

When the branch is created, you will receive a confirmation message:

```text
org.apache.spark.sql.DataFrame = [refType: string, name: string … 1 more field]
```

Step 2. Create a New Table in the Branch

Now you'll create a new table that joins each employee with the department they belong to. It does this by matching the employee's department ID (employee_department_id) to the department ID (dept_id). For example, HR might perform this task to update its employee records.

Run the following command to create the new table:

```scala
// Creating a new table in the demo_etl branch
spark.sql("CREATE TABLE arctic.demo.employee_info AS SELECT * FROM arctic.demo.employee emp JOIN arctic.demo.department dept ON emp.employee_department_id = dept.dept_id")
```

When the table is created, you will receive a confirmation message:

```text
org.apache.spark.sql.DataFrame = []
```
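Because the sample data is small, you can predict the contents of employee_info before you query it. The following plain Scala sketch does not use Spark. It models the two sample tables as case classes, with the same rows as the INSERT statements, and applies the same inner-join condition as the CREATE TABLE ... AS SELECT statement. The case class and object names are hypothetical.

```scala
// Plain Scala model of the sample tables (no Spark involved).
final case class Employee(empId: Int, name: String, managerEmployeeId: Int,
                          employeeDepartmentId: Int, yearJoined: Int)
final case class Department(deptId: Int, deptName: String)
final case class EmployeeInfo(employee: Employee, department: Department)

object EmployeeInfoPreview {
  // Same rows as the INSERT INTO statements for the two sample tables.
  val employees: Seq[Employee] = Seq(
    Employee(1, "Bob", -1, 1, 2020),
    Employee(2, "Alice", 1, 1, 2021),
    Employee(3, "Smith", 2, 1, 2021),
    Employee(4, "James", -1, 2, 2019))
  val departments: Seq[Department] = Seq(Department(1, "Finance"), Department(2, "Sales"))

  // Inner join on employee_department_id = dept_id, as in the CTAS query.
  val employeeInfo: Seq[EmployeeInfo] =
    for {
      e <- employees
      d <- departments
      if e.employeeDepartmentId == d.deptId
    } yield EmployeeInfo(e, d)

  def main(args: Array[String]): Unit =
    employeeInfo.foreach(r => println(s"${r.employee.name} -> ${r.department.deptName}"))
}
```

Running `main` prints `Bob -> Finance`, `Alice -> Finance`, `Smith -> Finance`, and `James -> Sales`. Spark does not guarantee row order, so rows queried from the real table may appear in a different order.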

Step 3. Merge Your Changes Into the Main Branch

Now that you've created the new table in the isolated demo_etl branch, you can safely merge your changes into the main branch. In this case, the merge adds the employee_info table created in the demo_etl branch to the main branch. In a production scenario, this is how you would update the main branch that production workloads consume.

Run the following command to merge the changes into the main branch:

```scala
spark.sql("MERGE BRANCH demo_etl INTO main IN arctic")
```

When the merge is completed, you will receive a confirmation message:

```text
org.apache.spark.sql.DataFrame = [name: string, hash: string]
```

Optionally, if you have completed all your work on the demo_etl branch and it serves no further purpose, you can drop it as a housekeeping practice. To drop a branch you no longer need, use the following command:

```scala
spark.sql("DROP BRANCH demo_etl IN arctic")
```

Step 4. Validate the Merge

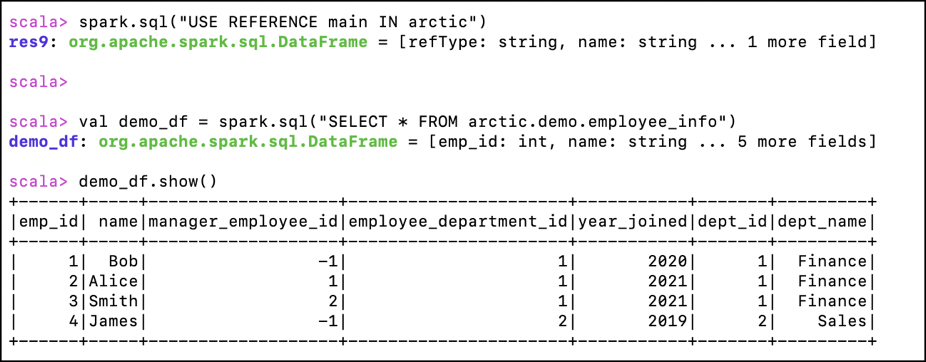

Finally, validate that the new table you created in the demo_etl branch is now merged into the main branch.

Run the following command to switch to the main branch:

```scala
spark.sql("USE REFERENCE main IN arctic")
```

Then run the following commands to check that the new table is in the main branch:

```scala
val demo_df = spark.sql("SELECT * FROM arctic.demo.employee_info")
demo_df.show()
```

The output lists the four employees joined with their departments: Bob, Alice, and Smith in Finance, and James in Sales.

Wrap-Up and Next Steps

Congratulations! You have successfully completed this tutorial and can work with Arctic catalogs in a Spark environment, create tables in a catalog, update tables in a branch, and merge a development branch back into the main branch.

To learn more about Dremio Arctic, see: