Optimizing Tables

Dremio integrates support for Apache Iceberg tables, an open-source data lakehouse table format designed for petabyte-scale tables. Iceberg tables bring the reliability and simplicity of SQL tables to the data lakehouse. Iceberg tables also function in a fully-open and accessible manner that allows multiple engines (such as Dremio, Spark, etc.) to operate on the same dataset. As these tables are being written to and updated, the number of data files and metadata files accumulates. This accumulation of files can impact query performance, and to remedy this impact, Dremio suggests running OPTIMIZE TABLE as part of your table management workflow.

Dremio enables you to optimize query performance to maximize the speed and efficiency with which data is retrieved. Performance optimization occurs by running SQL statements to optimize Iceberg tables. These SQL statements can be run for all Iceberg tables and catalogs in Dremio.

How Dremio Optimizes a Table

Dremio enables you to rewrite data files (via compaction) using the optimize functionality, which combines small files or splits large files into an optimal file size to reduce metadata overhead and runtime file open costs. This functionality also enables you to repartition the data when a table's partition has changed.

You may need to optimize Iceberg tables in Dremio due to sub-optimal file sizes and an evolving partition scheme. The optimize functionality supports the bin_pack clause, enabling users to bin pack files in partitions they actively write to.

Sub-optimal File Sizes

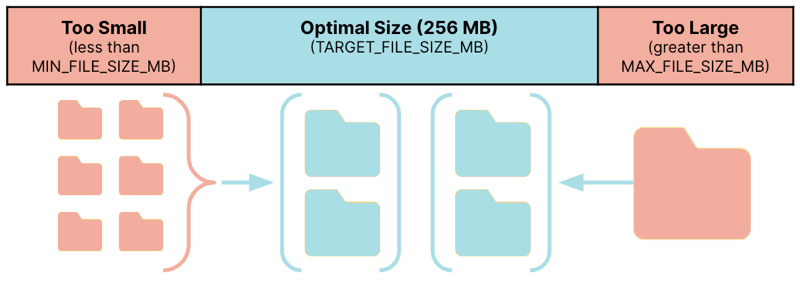

Iceberg tables that are constantly being updated can have data files of various sizes. As a result, query performance can be negatively affected by sub-optimal file sizes. The optimal file size in Dremio is 256 MB. The optimize functionality logically combines smaller files and splits larger ones to 256 MB (see the following graphic), helping to reduce metadata overhead and costs related to opening and reading files.

Clustered Iceberg Tables

The OPTIMIZE TABLE command should be used to optimize Iceberg tables. However, its behavior differs based on whether or not tables are clustered.

For clustered tables, OPTIMIZE TABLE incrementally reorders data to achieve the optimal data layout and manages file sizes. This mechanism may take longer to run on newly loaded or unsorted tables. Additionally, you may be required to run multiple OPTIMIZE TABLE commands to converge on an optimal file layout.

For unclustered tables, OPTIMIZE TABLE combines small files or splits large files to achieve an optimal file size, reducing metadata overhead and runtime file open costs.

We recommend enabling automated table maintenance to eliminate the need to run optimizations for clustered Iceberg tables manually. For more details, see Automatic Optimization.

Evolving Partition Scheme

To improve read or write performance, you can partition data based on the values of a table's columns. If the columns used in a partition evolve over time, query performance can be impacted when the queries are not aligned with the current segregations of the partition. Optimizing a table identifies and rewrites the data files if they do not follow the most recent partition specification.

You can optimize selected partitions using the partition filter clause. Use this SQL command:

- When select partitions are queried more often or are of more importance (than others) and it's not necessary to optimize the entire table.

- When select partitions are more active and are constantly being updated, and optimization should only occur when activity is low or paused.

Rewriting Manifest Files

Iceberg uses metadata files (or manifests) to track point-in-time snapshots by maintaining all deltas as a table. This metadata layer functions as an index over a table’s data and the manifest files contained in this layer speed up query planning and prune unnecessary data files. For Iceberg tables that are constantly being updated (such as the ingestion of streaming data or users performing frequent DML operations), the number of manifest files that are sub-optimal in size can grow over time. Additionally, the clustering of metadata entries in these files may not be optimal. As a result, sub-optimal manifests can impact the time it takes to plan and execute a query.

Dremio provides the capability for you to rewrite these manifest files based on a size criteria. Such rewrites occur quickly and use minimal resources since it only affects the manifest files. This operation results in the optimization of the metadata, helping to reduce query planning time.

The target size for a manifest file is based on the Iceberg table's property. If a default size is not set, Dremio defaults to 8 MB. For the target size, Dremio considers the range from 0.75x to 1.8x, inclusive, to be optimal. Manifest files exceeding the 1.8x size will be split while files smaller than the 0.75x size will be compacted.

Optimizing Tables with Position Delete Files

Iceberg v2 added the ability for delete files to be encoded to rows that have been deleted in existing data files. This enables you to delete or replace individual rows in immutable data files without the need to rewrite those files. Position delete files identify deleted rows by file and position in one or more data files, as shown in the following example.

file_path | pos |

|---|---|

file:/Users/test.user/Downloads/gen_tables/orders_with_deletes/data/2021/2021-00.parquet | 6 |

file:/Users/test.user/Downloads/gen_tables/orders_with_deletes/data/2021/2021-00.parquet | 16 |

Dremio can optimize Iceberg tables containing position delete files. This is beneficial to do because when data files are read, the associated delete files are stored in memory. Also, one data file can be linked to several delete files, which can impact read time.

When you optimize a table in Dremio, the position delete files are removed and the data files that are linked to them are rewritten. Data files are rewritten if any of the following conditions are met:

- The file size is not within the optimum range.

- The partition's specification is not current.

- The data file has an attached delete file.

When optimizing a table using the MIN_INPUT_FILES option, consider the minimum number of qualified files needed for compaction. Delete files count towards determining whether the minimum threshold is reached.

You only need to optimize an Iceberg table once to remove the delete files. Dremio does not write delete files, even when performing DML operations. As a result, optimizing an Iceberg table containing delete files just once can improve read performance.

For guidance on using the optimize functionality in your queries, see OPTIMIZE TABLE.

Routing Optimization Jobs

You can route jobs that run OPTIMIZE TABLE to specific engines by using a routing rule that uses the query_label() condition. For more information, see Engine Routing.

Limitations

- You can run only one optimize query at a time on the selected Iceberg table partition.

- The optimize functionality does not support sort ordering.