Best Practices for Creating Raw and Aggregation Reflections

Dremio recommends that you follow these best practices when creating Reflections.

Design Reflections for expensive query patterns

- Review query history (jobs) to determine the most expensive and most-frequent queries being submitted.

- Look in the job profiles for these queries. Tables and views referenced by multiple queries that perform expensive scans, joins, and aggregations are good candidates for Reflections.

- Examine the SQL for the selected queries that reference the same table or view to find patterns that can help you define a Reflection on that table or view that satisfies as many of those queries as possible.

Avoid the “more are always better” approach

Creating more Reflections than are necessary to support your data consumers can lead to the use of more resources than might be optimal for your environment, both in terms of system resources and the time and attention devoted to working with them.

Establish criteria for when to create reflections

Create them only when data consumers are experiencing slow query responses, or when reports are not meeting established SLAs.

Create Reflections where they do the most work without duplicating the work of other reflections

You can do so by following the recommendation in the section Layering Datasets in Datasets.

Establish a routine for checking how often Reflections are used by the query planner

At regular intervals, check for Reflections that are no longer being used and evaluate whether they should be removed. Query patterns can change over time, and frequently-used Reflections can gradually become less relevant.

Use Supporting Anchors

Anchors for Reflections are views that data consumers have access to from their business-intelligence tools. As you develop a better understanding of query patterns, you might want to support those patterns by creating Reflections from views that perform expensive joins, transformations, filters, calculations, or a combination of those operations. You would probably not want data consumers to be able to access those views directly in situations where the query optimizer did not use any of the Reflections created from those views. Repeated and concurrent queries on such views could put severe strain on system resources.

You can prevent queries run by data consumers from accessing those views directly. Anchors that perform expensive operations and to which access is restricted are called supporting anchors.

For example, suppose that you find these three, very large tables are used in many queries:

- Customer

- Order

- Lineitem

You determine that there are a few common patterns in the user queries on these tables:

- The queries frequently join the three tables together.

- Queries always filter by

commit_date < ship_date - There is a calculated column in most of the queries:

extended_price * (1-discount) AS revenue

You can create a view that applies these common patterns, and then create a raw Reflection to accelerate queries that follow these patterns.

First, you create a folder in the catalog that your data consumers have access to. Then, you configure this folder to be invisible and inaccessible to the data consumers.

Next, you write the query to create the view, you follow these guidelines:

- Use

SELECT *to include all columns, making it possible for the query optimizer to accelerate the broadest set of queries. Alternatively, if you know exactly which subset of columns are used in the three tables, you can include just that subset in the view. - Add any calculated columns, which in this case is the revenue column.

- Apply the appropriate join on the three tables.

- Apply any filters that are used by all queries, which in this case is only

commit_date < ship_date. - Always use the most generic predicate possible to maximize the number of queries that will match.

Next, you run the following query to create a new view:

Create a new viewSELECT *,

extendedprice * (1 - discount) AS revenue

FROM customer AS c,

orders AS o,

lineitem AS l

WHERE c.c_custkey = o.o_custkey AND

l.l_orderkey = o.o_orderkey AND

o.commit_date < o.ship_date;

Then, you save the view in the folder that you created earlier.

Finally, you create one or more raw Reflections on this new supporting anchor. If most of the queries against the view were aggregation queries, you could create an aggregation Reflection. In both cases, you can select columns, as needed, to sort on or partition on.

The result is that, even though the data consumers do not have access to the supporting anchor, Dremio can accelerate their queries by using the new Reflections as long as they have access to the tables that the Reflections are ultimately derived from: Customer, Order, and Lineitem.

If the query optimizer should determine that a query cannot be satisfied by any of the Reflections, it is possible, if no other views can satisfy it, for the query to run directly against the tables, as is always the case with any query.

Horizontally Partition Reflections that Have Many Rows

If you select a column for partitioning in a data Reflection, Dremio physically groups records together into a common directory on the file system. For example, if you partition by the column Country, in which the values are two-letter abbreviations for the names of countries, such as US, UK, DE, and CA, Dremio stores the data for each country in a separate directory named US, UK, DE, CA, and so on. This optimization allows Dremio to scan a subset of the directories based on the query, which is an optimization called partition pruning.

If a user queries on records for which the value of Country is US or UK, then Dremio can apply partition pruning to scan only the US and UK directories, significantly reducing the amount of data that is scanned for the query.

When you are selecting a partitioning column for a data Reflection, ask yourself these questions:

- Is the column used in many queries?

- Are there relatively few unique values in the column (low cardinality)?

To partition the data, Dremio must first sort all records, which consumes resources. Accordingly, partition data only on columns that can be used to optimize queries. In addition, the number of unique values for a column should be relatively small, so that Dremio creates only a relatively small number of partitions. If all values in a column are unique, the cost to partition outweighs the benefit.

In general, Dremio recommends the total number of partitions for a Reflection to be less than 10,000.

Because Reflections are created as Apache Iceberg tables, you can use partition transforms to specify transformations to apply to partition columns to produce partition values. For example, if you choose to partition on a column of timestamps, you can set partition transforms that produce partition values that are the years, months, days, or hours in those timestamps. The following table lists the partition transforms that you can choose from.

- If a column is listed as a partition column, it cannot also be listed as a sort column for the same Reflection.

- In aggregation Reflections, each column specified as a partition column or used in transform must also be listed as a dimension column.

- In raw Reflections, each column specified as a partition column or used in transform must also be listed as a display column.

| Value | Type of Partition Transform | Description |

|---|---|---|

| IDENTITY | identity(<column_name>) | Creates one partition per value. This is the default transform. If no transform is specified for a column named by the name property, an IDENTITY transform is performed. The column can use any supported data type. |

| YEAR | year(<column_name>) | Partitions by year. The column must use the DATE or TIMESTAMP data type. |

| MONTH | month(<column_name>) | Partitions by month. The column must use the DATE or TIMESTAMP data type. |

| DAY | day(<column_name>) | Partitions on the equivalent of dateint. The column must use the DATE or TIMESTAMP data type. |

| HOUR | hour(<column_name>) | Partitions on the equivalent of dateint and hour. The column must use the TIMESTAMP data type. |

| BUCKET | bucket(<integer>, <column_name>) | Partitions data into the number of partitions specified by an integer. For example, if the integer value N is specified, the data is partitioned into N, or (0 to (N-1)), partitions. The partition in which an individual row is stored is determined by hashing the column value and then calculating <hash_value> mod N. If the result is 0, the row is placed in partition 0; if the result is 1, the row is placed in partition 1; and so on.The column can use the DECIMAL, INT, BIGINT, VARCHAR, VARBINARY, DATE, or TIMESTAMP data type. |

| TRUNCATE | truncate(<integer>, <column_name>) | If the specified column uses the string data type, truncates strings to a maximum of the number of characters specified by an integer. For example, suppose the specified transform is truncate(1, stateUS). A value of CA is truncated to C, and the row is placed in partition C. A value of CO is also truncated to C, and the row is also placed in partition C.If the specified column uses the integer or long data type, truncates column values in the following way: For any truncate(L, col), truncates the column value to the biggest multiple of L that is smaller than the column value. For example, suppose the specified transform is truncate(10, intColumn). A value of 1 is truncated to 0 and the row is placed in the partition 0. A value of 247 is truncated to 240 and the row is placed in partition 240. If the transform is truncate(3, intColumn), a value of 13 is truncated to 12 and the row is placed in partition 12. A value of 255 is not truncated, because it is divisble by 3, and the row is placed in partition 255.The column can use the DECIMAL, INT, BIGINT, VARCHAR, or VARBINARY data type. Note: The truncate transform does not change column values. It uses column values to calculate the correct partitions in which to place rows. |

Partition Reflections to Allow for Partition-Based Incremental Refreshes

Incremental refreshes of data in Reflections are much faster than full refreshes. Partition-based incremental refreshes are based on Iceberg metadata that is used to identify modified partitions and to restrict the scope of the refresh to only those partitions. To learn more about the refresh types available for Apache Iceberg tables and the algorithm that determines when to apply each type, see Types of Refresh for Reflections on Apache Iceberg Tables in Refreshing Reflections.

For partition-based incremental refreshes, both the base table and its Reflections must be partitioned, and the partition transforms that they use must be compatible. The following table lists which partition transforms on the base table and which partition transforms on Reflections are compatible:

| Partition Transform on the Base Table | Compatible Partition Transforms on Reflections |

|---|---|

| Identity | Identity, Hour, Day, Month, Year, Truncate |

| Hour | Hour, Day, Month, Year |

| Day | Day, Month, Year |

| Month | Month, Year |

| Year | Year |

| Truncate | Truncate |

- If both a base table and a Reflection use the Truncate partition transform, follow these rules concerning truncation lengths:

- If the partition column uses the String data type, the truncation length used for the Reflection must be less than or equal to the truncation length used for the base table.

- If the partition column uses the Integer data type, the remainder from the truncation length on the Reflection (A) divided by the truncation length on the base table (B) must be equal to 0:

A MOD B = 0 - If the partition column uses any other data type, the truncation lengths must be identical.

- If a base table uses the Bucket partition transform, partition-based incremental refreshes are not possible.

Partitioning Aggregation Reflections on Timestamp Data in Very Large Base Tables

Suppose you want to define an aggregation Reflection on a base table that has billions of rows. The base table includes a column that either uses the TIMESTAMP data type or includes a timestamp as a string, and the base table is partitioned on that column.

In your aggregation Reflection, you plan to aggregate on timestamp data that is in the base table. However, to get the benefits of partition-based incremental refresh, you need to partition the Reflection in a way that is compatible with the partitioning on the base table. You can make the partitioning compatible in either of two ways:

- By defining a view on the base table, and then defining the aggregation Reflection on that view

- By using the advanced Reflection editor to define the aggregation Reflection on the base table

Defining an Aggregation Reflection on a View

If the timestamp column in the base table uses the TIMESTAMP data type, use one of the functions in this table to define the corresponding column in the view. You can partition the aggregation Reflection on the view column and use the partition transform that corresponds to the function.

| Function in View Definition | Corresponding Partition Transform |

|---|---|

| DATE_TRUNC('HOUR', <base_table_column>) | HOUR(<view_col>) |

| DATE_TRUNC('DAY', <base_table_column>) | DAY(<view_col>) |

| DATE_TRUNC('MONTH', <base_table_column>) | MONTH(<view_col>) |

| DATE_TRUNC('YEAR', <base_table_column>) | YEAR(<view_col>) |

| CAST <base_table_column> as DATE | DAY(<view_col>) |

| TO_DATE(<base_table_column>) | DAY(<view_col>) |

If the timestamp column in the base table uses the STRING data type, use one of the functions in this table to define the corresponding column in the view. You can partition the aggregation Reflection on the view column and use the partition transform that corresponds to the function.

| Function in View Definition | Corresponding Partition Transform |

|---|---|

| LEFT(<base_table_column>, X) | TRUNCATE(<view_col>, X) |

| SUBSTR(<base_table_column>, 0, X) | TRUNCATE(<view_col>, X) |

| SUBSTRING(<base_table_column>, 0, X) | TRUNCATE(<view_col>, X) |

Defining an Aggregation Reflection on a Base Table

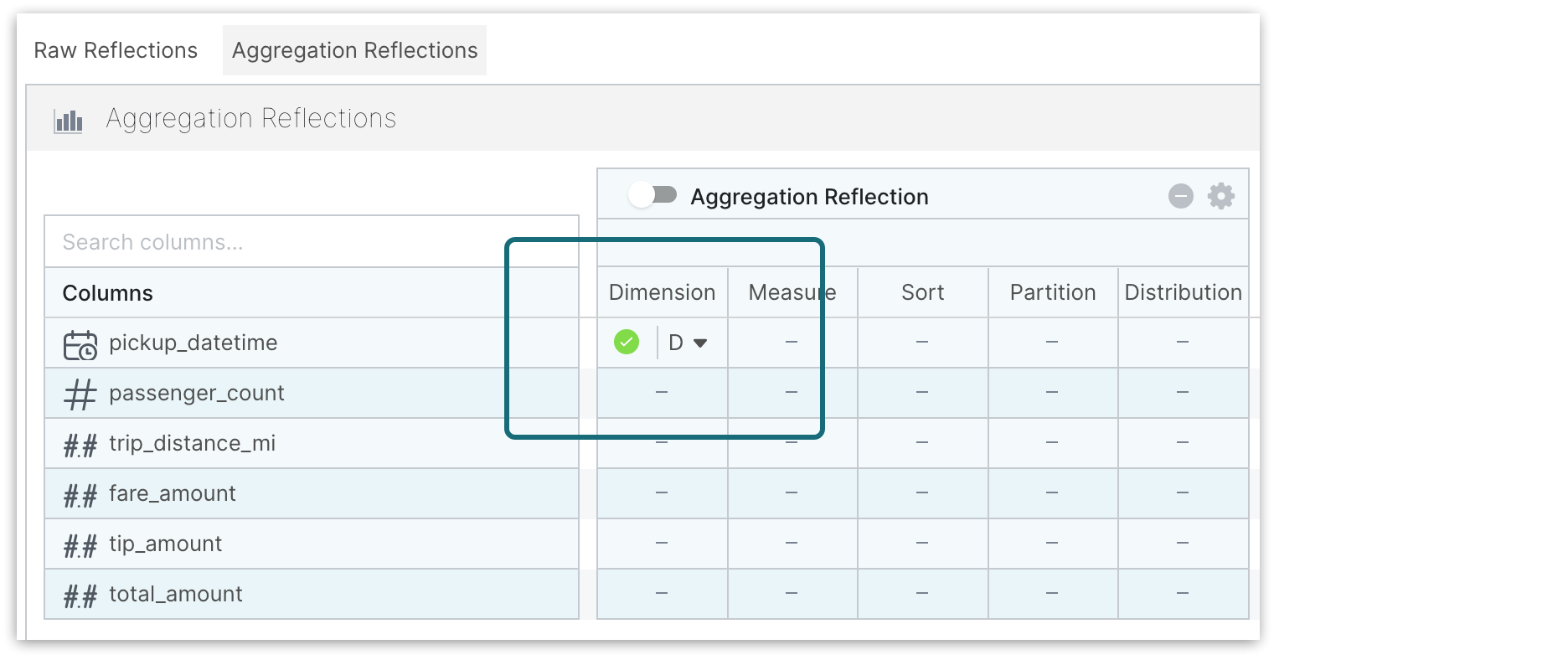

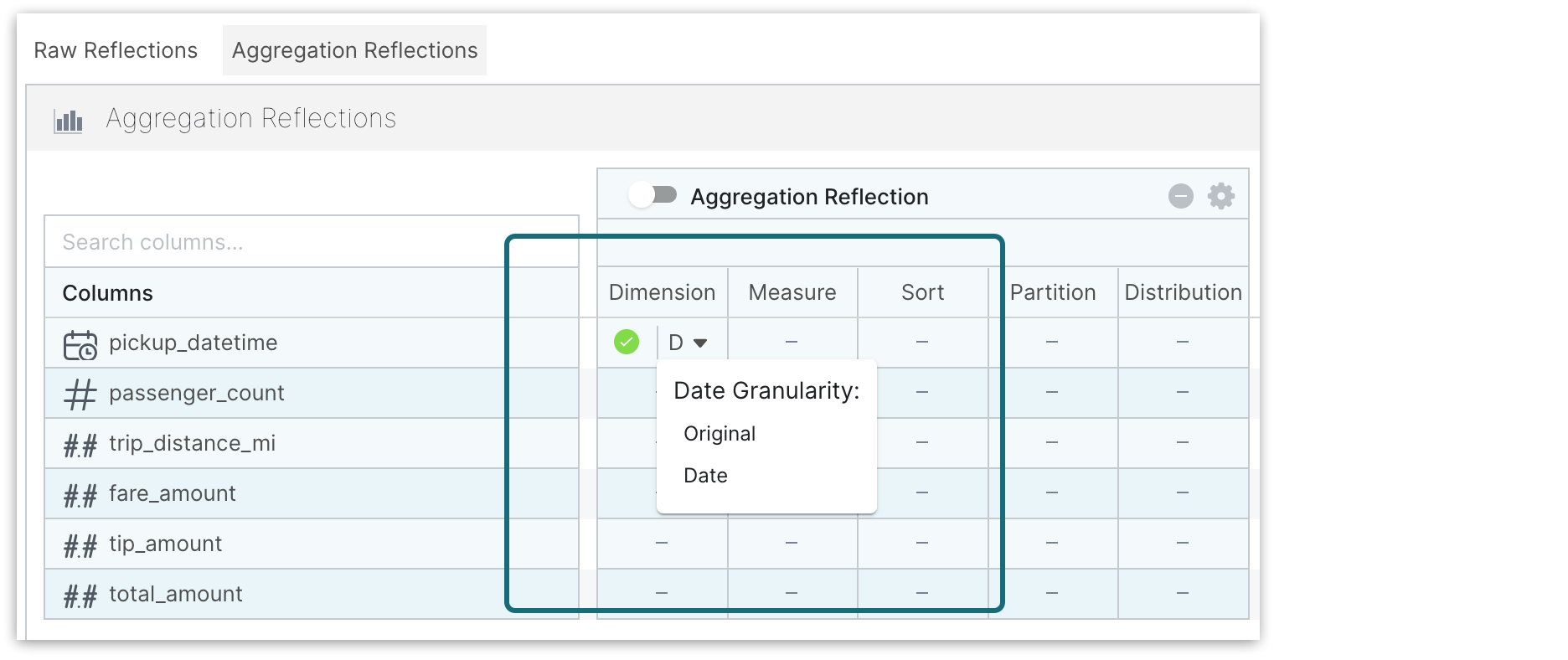

When creating or editing the aggregation Reflection in the Advanced View, as described in Creating and Editing Reflections, follow these steps:

- Set the base table's timestamp column as a dimension.

- Click the down-arrow next to the green circle.

- Select Date for the date granularity.

Sort Reflections on High-Cardinality Columns

The sort option is useful for optimizing queries that use filters or range predicates, especially on columns with high cardinality. If sorting is enabled, during query execution, Dremio skips over large blocks of records based on filters on sorted columns.

Dremio sorts data during the execution of a query if a Reflection spans multiple nodes and is composed of multiple partitions.

Sorting on more than one column in a single data Reflection typically does not improve read performance significantly and increases the costs of maintenance tasks.

For workloads that need sorting on more than one column, consider creating multiple Reflections, each being sorted on a single column.

Create Reflections from Joins that are Based on Joins from Multiple Queries

Joins between tables, views, or both tend to be expensive. You can reduce the costs of joins by performing them only when building and refreshing Reflections.

As an administrator, you can identify a group of queries that use similar joins. Then, you can create a general query that uses a join that is based on the similar joins, but does not include any additional predicates from the queries in the group. This generic query can serve as the basis of a raw Reflection, an aggregation Reflection, or both.

For example, consider the following three queries which use similar joins on views A, B and C:

Three queries with joins on views A, B, and CSELECT a.col1,

b.col1,

c.col1

FROM a

JOIN b

ON (a.col4 = b.col4)

JOIN c

ON (c.col5 = a.col5)

WHERE a.size = 'M' AND

a.col3 > '2001-01-01' AND

b.col3 IN (

'red',

'blue',

'green');

SELECT a.col1,

a.col2,

c.col1,

COUNT(b1)

FROM a

JOIN b

ON (a.col4 = b.col4)

JOIN c

ON (c.col5 = a.col5)

WHERE a.size = 'M' AND

b.col2 < 10 AND

c.col2 > 2

GROUP BY a.col1,

a.col2,

c.col1;

SELECT a.col1,

b.col2

FROM a

JOIN b

ON (a.col4 = b.col4)

JOIN c

ON (c.col5 = a.col5)

WHERE c.col1 = 123;

You can write and run this generic query to create a raw Reflection to accelerate all three original queries:

Create a Reflection to accelerate three queriesSELECT a.col1,

a.col2,

a.col3,

b.col1,

b.col2,

b.col3,

c.col1,

c.col2

FROM a

JOIN b

ON (a.col4 = b.col4)

join c

ON (c.col5 = a.col5);