Pillar 2: Performance Efficiency

Dremio Cloud is a powerful massively parallel processing (MPP) platform that can process terabyte-scale datasets. To get the best performance from your Dremio Cloud environment, follow these design principles and best practices for implementation.

Dimensions of Performance Optimization

When optimizing Dremio Cloud, several factors can affect workload and project performance. Queries submitted to Dremio Cloud must be planned on the control plane before being routed for execution. The resource requirements and degree of optimization of individual queries can vary widely. Those queries can be rewritten and optimized on their own without regard to a larger engine.

Beyond individual queries, executor nodes have individual constraints of memory and CPU. Executors in Dremio Cloud are also part of an engine that groups executors together to process queries in parallel across multiple machines. The size of the engine that a query runs on can affect its performance. To enhance the ability to handle additional queries beyond certain concurrency thresholds, configure replicas for the engine.

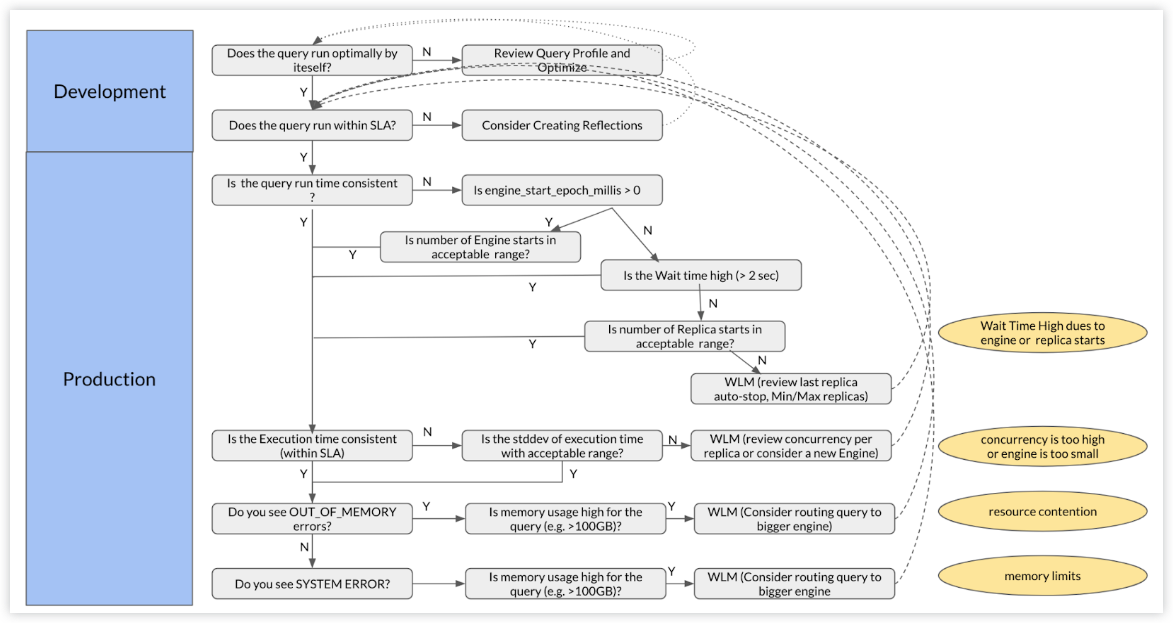

These dimensions of performance optimization can be simplified in the following decision tree, which addresses the most common scenarios. In the decision tree, engine_start_epoch_millis > 0 implies that the engine is down.

Principles

Perform Regular Maintenance

Conduct regular maintenance to ensure that your project is set up for optimal performance and can handle more data, queries, and workloads. Regular maintenance will establish a solid baseline from which you can design and optimize. Dremio Cloud can be set up to automatically optimize and vacuum tables in your Arctic catalog.

Optimize Queries for Efficiency

Before worrying about scaling out your engines, it is important to optimize your semantic layer and queries to be as efficient as possible. For example, if there are partition columns, you should use them. Create sorted or partitioned Reflections. Follow standard SQL writing best practices such as applying functions to values rather than columns in where clauses.

Optimize Engines

Dremio Cloud provides several facilities to allow workload isolation and ensure your queries do not overload the engines. Multiple engines are used to keep some queries from affecting others and concurrency rules are used to buffer queries to prevent overloading any one particular engine.

Best Practices

Design Semantic Layer for Workload Performance

Dremio Cloud’s enterprise-scale semantic layer clearly defines the boundary between your physically stored tables and your logical, governed, and self-service views. The semantic layer seamlessly allows data engineers and semantic data modelers to create views based on tables without having to make copies of the physical data.

Since interactive performance for business users is a key capability of the semantic layer, when appropriate, Dremio Cloud can leverage physically optimized representations of source data known as reflections. When queries are made against views that have Reflections enabled, the query optimizer can accelerate a query by using one or more Reflections to partially or entirely satisfy that query rather than processing the raw data in the underlying data source. Queries do not have to be rewritten to take advantage of Reflections. Instead, Dremio Cloud's optimizer automatically considers Reflection suitability while planning the query.

With Dremio Cloud, you can create layers of views that allow you to present data to business consumers in a format they need while satisfying the security requirements of the organization. Business consumers do not need to worry about which physical locations the data comes from or how the data is physically organized. A layered approach allows you to create sets of views that can be reused many times across multiple projects.

Leveraging Dremio’s layering best practices promotes a more-performant, low-maintenance solution that can provide agility to development teams and business users as well as better control over data.

Improve the Performance of Poor-Performing Queries

Run SELECT * FROM sys.project.jobs_recent to analyze the query history and determine which queries are performing sub-optimally. This allows you to consider a number of factors, including the overall execution time of a query. Identify the 10 longest-running queries to understand why they are taking so long. For example, is it the time taken to read data from the source, lacking CPU cycles, query spilling to disk, query queued at the start, or another issue? Did the query take a long time to plan?

Read Query Performance Analysis and Improvement for details about query performance analysis techniques. This white paper was developed based on Dremio Enterprise, but the content applies equally to Dremio Cloud.

The query history also allows you to focus on planning times. You should also investigate queries to pinpoint high planning time, which could be due to the complexity of the query (which you can address by rewriting the query) or due to many Reflections being considered (which indiciates that too many Reflections are defined in the environment). Read Remove Unused Reflections for more information about identifying redundant Reflections in your Dremio Cloud project.

The query history also allows you to focus on metadata refresh times, which could be due to inline metadata refresh. Read Optimize Metadata Refresh Frequency for more information about checking metadata refresh schedules.

Sometimes, query performance is inconsistent. A query may complete execution in less than 10 seconds in one instance but require 1 minute of execution time in another instance. This is a sign of resource contention in the engine, which can happen in high-volume environments or when too many jobs (including user queries, metadata, and reflections) are running at the same time. We recommend having separate, dedicated engines for metadata refreshes, Reflection refreshes, and user queries to reduce the contention for resources when user queries need run concurrently with refreshes.

For Reflection jobs that require excessive memory, we recommend two Reflection refresh engines of different sizes, routing the Reflections that require excessive memory to the larger engine. This is typically needed for Reflections on views that depend on the largest datasets and can be done with the ALTER TABLE ROUTE REFLECTIONS command.

Read Dremio Profiles to Pinpoint Bottlenecks

Dremio Cloud job profiles contain a lot of fine-grained information about how a query was planned, how the phases of execution were constructed, how the query was actually executed, and the decisions made about whether to use Reflections to accelerate the query.

For each phase of the query that is documented in the job profile, check the start and end times of the phase for an initial indication of the phase in which any bottlenecks are located. After you identify the phase, check the operators of that phase to identify which operator or thread within the operator may have been the specific bottleneck. This information usually helps determine why a query performs sub-optimally so that you can plan improvements. Reasons for bottlenecks and potential improvement options include:

-

High metadata retrieval times from inline metadata refresh indicate that you should revisit metadata refresh settings.

-

High planning time can be caused by too many Reflections or may mean that a query is too complex and should be rewritten.

-

High engine start times indicate that the engine is down. The enqueued time may include replica start time. You may be able to mitigate these issues with minimum replica and last replica auto-stop settings.

-

High setup times in table functions indicate overhead due to opening and closing too many small files. High wait times indicate that there is a network or i/o delay in reading the data. High sleep times in certain phases could indicate CPU contention.

Read Reading Dremio Job Profiles for details about job profile analysis techniques. This white paper was developed based on Dremio Enterprise, but the content applies equally to Dremio Cloud.

Engine Routing and Workload Management

Since the workloads and volumes of queries change over time, reevaluate engine routing settings, engine sizes, engine replicas, and concurrency per replica and adjust as needed to rebalance the proportion of queries that execute concurrently on a replica of an engine.

Right-Size Engines and Executors

Analyze the query history to determine whether a change in the number of executors in your engines is necessary.

When the volume of queries being simultaneously executed by the current set of executor nodes in an engine starts to reach a saturation point, Dremio Cloud exhibits several symptoms. Saturation point is typically manifested as increased sleep time during query execution. Sleep time is incurred when a running query needs to wait for available CPU cycles due to all available CPUs being in operation. Another symptom is an increased number of queries spilling to disk or out-of-memory exceptions.

You can identify these symptoms by analyzing the system table by running SELECT * FROM sys.project.jobs_recent. The resulting table lists query execution times, planning time, engine start times, enqueued times, and job failure.

Failure to address these symptoms can result in increasing query failures, increasing query times, and queries spilling to disk, which in turn lead to a bad end-user experience and poor satisfaction. Spilling to disk ensures that a query succeeds because some of its high-memory-consuming operations are processed via local disks. This reduces the memory footprint of the query significantly, but the trade-off is that the query inevitably runs more slowly.

You can alleviate these issues by adding replicas to the engine and reducing concurrency per replica and adding a larger engine, then altering the engine routing rules to route some of the workload to the new engine. Remember that a query executes on the nodes of a single replica or an engine, not across multiple replicas or multiple engines.

A good reason to create a new engine is when a new workload is introduced to your Dremio Cloud project, perhaps by a new department within an organization, and the queries cause the existing engine setup to degrade in performance. Creating a new engine to isolate the new workload, most likely by creating rules to route queries from users in that organization to the new engine, is a useful way of segregating workloads.

Leverage Reflections to Improve Performance

When developing use cases in Dremio Cloud’s semantic layer, it’s often best to build out the use case iteratively without any Reflections to begin with. Then, as you complete iterations, run the queries and analyze the data in the query history to deduce which queries take the longest to execute and whether any common factors among a set of slow queries are contributing to the slowness.

For example, if a set of five slow queries are each derived from a view that contains a join between two relatively large tables, you might find that adding a raw Reflection on the view that is performing the join helps to speed up all five queries because doing so creates an Apache Iceberg materialization of the join results, which is automatically used to accelerate views derived from the join. This provides the query planning and performance benefits of Apache Iceberg and allows you to partition the Reflection to accelerate queries for which the underlying data weren't initially optimized. This is an important pattern because it means you can leverage a small number of Reflections to speed up many workloads.

Raw Reflections can be useful when you have large volumes of JSON or CSV data. Querying such data requires processing the entire data set, which can be inefficient. Adding a raw Reflection over the JSON or CSV data again allows for an Apache Iceberg representation of that data to be created and opens up all of the planning and performance benefits that come along with it.

Another use of raw Reflections is to offload heavy queries from an operational data store. Often, database administrators do not want their operational data stores (for example, online transaction processing databases) overloaded with analytical queries while they are busy processing billions of transactions. In this situation, you can leverage Dremio Cloud raw Reflections again to create an Apache Iceberg representation of the operational table. When a query comes in that needs the data, Dremio reads the Reflection data instead of going back to the operational source.

Another very important use case that often requires raw Reflections is when you join on-premises data to cloud data. In this situation, retrieving the on-premises data often becomes a bottleneck for queries due to the latency in retrieving data from the source system. Leveraging a default raw Reflection on the view where the data is joined together often yields significant performance gains.

If you have connected Dremio Cloud to client tools that issue different sets of GROUP BY queries against a view, and the GROUP BY statements take too long to process compared to the desired service level agreement, consider adding an aggregation Reflection to the view to satisfy the combinations of dimensions and measures that are submitted from the client tool.

Read Best Practices for Creating Raw and Aggregation Reflections when you are considering how and where to apply Reflections.

Failing to make use of Dremio Cloud Reflections means you could be missing out on significant performance enhancements for some of your poorest-performing queries. However, creating too many Reflections can also have a negative impact on the system as a whole. The misconception is often that more Reflections must be better, but when you consider the overhead in maintaining and refreshing Reflections at intervals, Reflection refreshes can end up stealing valuable resources from your everyday workloads, especially if you have not created a dedicated Reflection refresh engine.

Where possible, organize your queries by pattern. The idea is to create as few Reflections as possible to service as many queries as possible, so finding points in the semantic tree through which many queries go can help you accelerate a larger number of queries. The more Reflections you have that may be able to accelerate the same query patterns, the longer the planner takes to evaluate which Reflection is best suited for accelerating the query being planned.

Optimize Metadata Refresh Performance

Add a dedicated metadata refresh engine to your Dremio Cloud project. This ensures that all metadata refresh activities for Parquet, Optimized Row Columnar (ORC), and Avro datasets that are serviced by executors are completed in isolation from any other workloads and prevents problems with metadata refresh workloads taking CPU cycles and memory away from business-critical workloads. This gives the refreshes have the best chance of finishing in a timely manner.