Monitoring

As an administrator, you can monitor logs, usage, system telemetry, jobs, and Dremio nodes.

As the Dremio Shared Responsibility Models outline, monitoring is a shared responsibility between Dremio and you. The Shared Responsibility Models lay out Dremio's responsibilities for providing monitoring technologies and logs and your responsibilities for implementation and use.

Logs

Logs are primarily for troubleshooting issues and monitoring the health of the deployment.

By default, Dremio uses the following locations to write logs:

- Tarball: <DREMIO_HOME>/log

- RPM: /var/log/dremio

- Kubernetes: /opt/dremio/log

Log Types

| Log Type | Description |
|---|---|
| Audit | The audit.json file tracks all activities that users perform within Dremio. For details, see Audit Logging. |
| System | The following system logs are enabled by default: |
| Query | Query logging is enabled by default. The queries.json file contains the log of completed queries; it does not include queries currently in planning or execution. You can retrieve the same information that is in queries.json using the sys.jobs_recent system table. Query logs include the following information: |
| Warning | The hive.deprecated.function.warning.log file contains warnings for Hive functions that have been deprecated. To resolve warnings that are listed in this file, replace deprecated functions with a supported function. For example, to resolve a warning that mentions NVL, replace NVL with COALESCE. |
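As an illustration of working with the query log, the following minimal sketch counts completed queries by outcome. It assumes that queries.json holds one JSON object per line and that each record carries an `outcome` field; both are assumptions to verify against your own file, and the sample records are hypothetical.

```python
import json
from collections import Counter
from typing import Iterable


def summarize_outcomes(lines: Iterable[str]) -> Counter:
    """Count records per outcome from queries.json-style lines."""
    counts: Counter = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip malformed or partially written lines
        if not isinstance(record, dict):
            continue  # only JSON objects are treated as query records
        # "outcome" is an assumed field name; adjust it to match your file.
        counts[record.get("outcome", "UNKNOWN")] += 1
    return counts


# Hypothetical sample records standing in for real log lines.
sample = [
    '{"queryId": "a1", "outcome": "COMPLETED"}',
    '{"queryId": "a2", "outcome": "FAILED"}',
    '{"queryId": "a3", "outcome": "COMPLETED"}',
    "",
]
summary = summarize_outcomes(sample)
print(summary)
```

To run the same summary against a real log, pass an open file handle instead of the sample list, for example `summarize_outcomes(open("/opt/dremio/log/queries.json"))` on a Kubernetes deployment that uses the default log location.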

Retrieving Logs from the Dremio Console Enterprise

Retrieve logs for Kubernetes deployments in the Dremio console at Settings > Support > Download Logs.

Prerequisites

- You must be using Dremio 25.1+. Log collection is powered by Dremio Diagnostics Collector (DDC).

- You must have the EXPORT DIAGNOSTICS privilege to view Download Logs options in Settings > Support.

Downloading Logs

To download logs:

- In the Dremio console, navigate to Settings > Support > Download Logs and click Start collecting data.

You may store a maximum of three log bundles. Delete log bundles as needed to start a new log collection if you reach the maximum.

We recommend the default Light collection, which provides 7 days of logs and completed queries in the queries.json file, for troubleshooting most issues. For more complex issues, select the Standard collection, which provides 7 days of logs and 28 days of completed queries in the queries.json file.

- When Dremio completes log collection, the log bundle appears in a list below Start collecting data. To download a log bundle, click Download next to the applicable bundle. Log bundles are available to download for 24 hours.

Logging in Kubernetes

By default, all logs are written to a persisted volume mounted at /opt/dremio/log.

To stop writing logs to this volume, set writeLogsToFile: false in the values-overrides.yaml configuration file, either globally or individually for each coordinator and executor parent. For more information, see Configuring Your Values.

Using the Container Console

All logs are written to the container's console (stdout) simultaneously. These logs can be monitored using a kubectl command:

kubectl logs [-f] [container-name]

Use the -f flag to continuously print new log entries to your terminal as they are generated.
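If you want to script log retrieval, a small wrapper like the sketch below can assemble the kubectl invocation. The pod name `dremio-executor-0` and the namespace `dremio` are hypothetical placeholders; the `-c`, `-n`, and `--tail` options are standard kubectl logs flags, not Dremio-specific settings.

```python
import subprocess
from typing import List, Optional


def kubectl_logs_command(
    pod: str,
    follow: bool = False,
    container: Optional[str] = None,
    namespace: Optional[str] = None,
    tail: Optional[int] = None,
) -> List[str]:
    """Build the argument list for a `kubectl logs` call."""
    cmd = ["kubectl", "logs"]
    if follow:
        cmd.append("-f")  # keep streaming new log entries
    cmd.append(pod)
    if container:
        cmd += ["-c", container]  # pick one container in the pod
    if namespace:
        cmd += ["-n", namespace]
    if tail is not None:
        cmd.append(f"--tail={tail}")  # start from the last N lines
    return cmd


def stream_logs(cmd: List[str]) -> None:
    """Run the command; requires kubectl and access to the cluster."""
    subprocess.run(cmd, check=True)


# Hypothetical pod and namespace names, used only for illustration.
cmd = kubectl_logs_command(
    "dremio-executor-0", follow=True, namespace="dremio", tail=100
)
print(" ".join(cmd))
# prints: kubectl logs -f dremio-executor-0 -n dremio --tail=100
```

Building the command as a list rather than a single string avoids shell quoting problems when names contain unusual characters.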

You can also write logs to a file on disk in addition to stdout. Read Writing Logs to a File for details.

Using the AKS Container

Azure provides integration with AKS clusters and Azure Log Analytics to monitor container logs. This is a standard practice that puts infrastructure in place to aggregate logs from containers into a central log store to analyze them.

AKS log monitoring is useful for the following reasons:

- Monitoring logs across lots of pods can be overwhelming.

- When a pod (for example, a Dremio executor) crashes and restarts, only the logs from the last pod are available.

- If a pod is crashing regularly, the logs are lost, which makes it difficult to analyze the reasons for the crash.

For more information regarding AKS, see Azure Monitor features for Kubernetes monitoring.

Enabling Log Monitoring

You can enable log monitoring when creating an AKS cluster or after the cluster has been created.

Once logging is enabled, all your container stdout and stderr logs are collected by the infrastructure for you to analyze.

- While creating an AKS cluster, enable container monitoring. You can use an existing Log Analytics workspace or create a new one.

- In an existing AKS cluster where monitoring was not enabled during creation, go to Logs on the AKS cluster and enable it.

Viewing Container Logs

To view all the container logs:

- Go to Monitoring > Logs.

- Use the filter option to see the logs from the containers that you are interested in.

Usage

Monitoring usage across your cluster makes it easier to observe patterns, analyze the resources being consumed by your data platform, and understand the impact on your users.

Catalog Usage Enterprise

Go to Settings > Monitor to view your catalog usage. You must be a member of the ADMIN role to access the Monitor page. When you open the Monitor page, you are directed to the Catalog Usage tab by default where you can see the following metrics:

- Top 10 most queried datasets and how often the jobs on the dataset were accelerated

- Top 10 most queried spaces and source folders

A source can be listed in the top 10 most queried spaces and source folders if the source contains a child dataset that was used in the query (for example, postgres.accounts). Queries of datasets in sub-folders (for example, s3.mybucket.iceberg_table) are classified by the sub-folder and not the source.
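The classification rule can be illustrated with a short sketch. It assumes that a query is attributed to the immediate parent of the dataset, which matches both examples above; how Dremio treats more deeply nested paths is not stated here, so that part is an assumption. The function name `usage_bucket` is hypothetical, and the simple split on dots ignores quoted identifiers that contain dots.

```python
def usage_bucket(dataset_path: str) -> str:
    """Return the container a query on this dataset would count toward.

    Assumption: the container is the dataset's immediate parent, so a
    dataset directly under a source counts toward the source, and a
    dataset in a sub-folder counts toward that sub-folder.
    """
    parts = dataset_path.split(".")
    if len(parts) < 2:
        raise ValueError("expected a path with at least a parent and a dataset")
    return ".".join(parts[:-1])


print(usage_bucket("postgres.accounts"))          # prints: postgres
print(usage_bucket("s3.mybucket.iceberg_table"))  # prints: s3.mybucket
```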

All datasets are assessed in the metrics on the Monitor page except for datasets in the system tables, the information schema, and home spaces.

The metrics on the Monitor page analyze only user queries. Refreshes of data Reflections and metadata refreshes are excluded.

Jobs Enterprise

Go to Settings > Monitor > Jobs to open the Jobs tab. You must be a member of the ADMIN role to access the Monitor page. The Jobs tab shows an aggregate view of the following metrics for the jobs that are running on your cluster:

- Total job count over the last 24 hours and the relative rate of failure/cancelation

- Top 10 most active users based on the number of jobs they ran

- Total jobs accelerated, total job time saved, and average job speedup from Autonomous Reflections over the past month

- Total number of jobs accelerated by autonomous and manual Reflections over time

- Total number of completed and failed jobs over time

- Jobs (completed and failed) grouped by the queue they ran on

- Percentage of time that jobs spent in each state

- Top 10 longest running jobs

To view all jobs and the details of specific jobs, see Viewing Jobs.

Resources Enterprise

Go to Settings > Monitor > Resources to open the Resources tab. You must be a member of the ADMIN role to access the Monitor page. The Resources tab shows an aggregate view of the following metrics for the jobs and nodes running on your cluster:

- Percentage of CPU and memory utilization for each coordinator and executor node

- Top 10 most CPU and memory intensive jobs

- Number of running executors

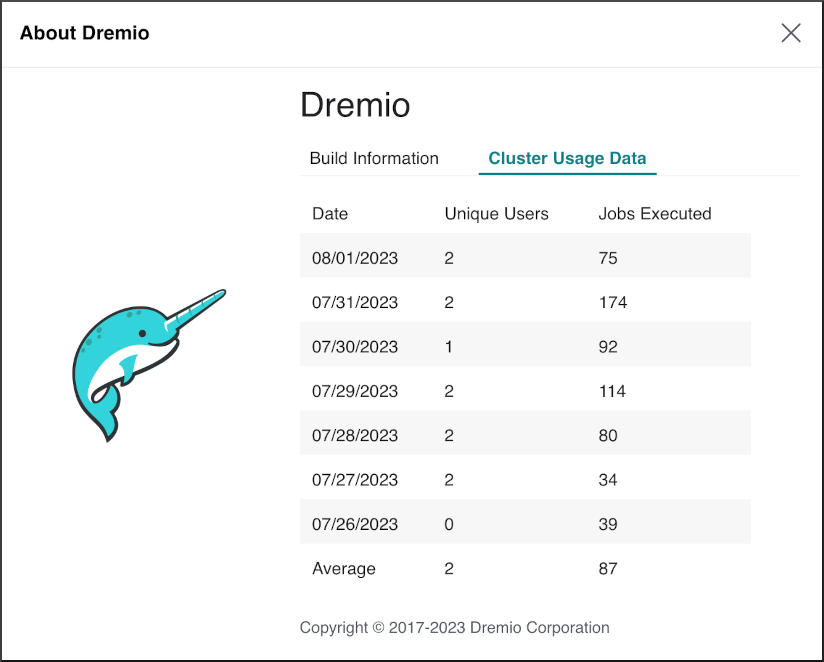

Cluster Usage

For each day, Dremio displays the number of unique users who executed jobs and the number of jobs executed. To view cluster usage data:

- Hover over the icon in the side navigation bar.

- Click About Dremio in the menu.

- Click the Cluster Usage Data tab.

System Telemetry

Dremio exposes system telemetry metrics in Prometheus format by default. It is not necessary to configure an exporter to collect the metrics. Instead, you can specify the host and port number where metrics are exposed in the dremio.conf file and scrape the metrics with any Prometheus-compliant tool.

To specify the host and port number where metrics are exposed, add these two properties to the dremio.conf file:

- services.web-admin.host: set to the desired host address (typically 0.0.0.0 or the IP address of the host where Dremio is running).

- services.web-admin.port: set to any desired value that is greater than 1024.

For example:

Example host and port settings in dremio.conf

services.web-admin.host: "127.0.0.1"
services.web-admin.port: 9090

Restart Dremio after you update the dremio.conf file to make sure your changes take effect.

Access the exported Dremio system telemetry metrics at http://<yourHost>:<yourPort>/metrics.
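For a quick check without a full Prometheus setup, the endpoint can be read and parsed with a few lines of code. The sketch below is a simplified parser for the Prometheus text format, not a complete implementation, and the metric names in the sample are hypothetical rather than actual Dremio metrics. The `fetch_metrics` helper is defined but not called, since it needs a running Dremio instance.

```python
from typing import Dict
from urllib.request import urlopen


def parse_metrics(text: str) -> Dict[str, float]:
    """Map each sample (name plus labels) to its numeric value."""
    samples: Dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        if "}" in line:
            # Labeled sample: keep everything up to the closing brace as the key.
            end = line.rfind("}") + 1
            key, rest = line[:end], line[end:].split()
        else:
            fields = line.split()
            key, rest = fields[0], fields[1:]
        if rest:
            samples[key] = float(rest[0])  # any trailing timestamp is ignored
    return samples


def fetch_metrics(host: str, port: int) -> Dict[str, float]:
    """Read and parse http://<host>:<port>/metrics."""
    with urlopen(f"http://{host}:{port}/metrics", timeout=10) as response:
        return parse_metrics(response.read().decode("utf-8"))


# Hypothetical sample payload used for illustration only.
sample = """\
# HELP example_memory_used_bytes Example gauge.
# TYPE example_memory_used_bytes gauge
example_memory_used_bytes{area="heap"} 1.5e+08
example_jobs_total 42
"""
metrics = parse_metrics(sample)
print(metrics["example_jobs_total"])  # prints: 42.0
```

With the example settings above, `fetch_metrics("127.0.0.1", 9090)` would read the endpoint from the Dremio host itself.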

For more information about Prometheus metrics, read Types of Metrics in the Prometheus documentation.