Profiles

Visual profiles and raw profiles are available for jobs that have run queries.

Visual Profiles

You can view the operations in visual profiles to diagnose performance or cost issues and to see the results of changes that you make, either to queries themselves or their environment, to improve performance or reduce costs.

A query profile details the plan that Dremio devised for running a query and shows statistics from the query's execution. A visual representation of a query profile is located on the Visual Profile tab. This visual profile consists of operators that are arranged as a tree, where each operator has one or two upstream operators that represent a specific action, such as a table scan, join, or sort. At the top of the tree, a single root operator represents the query results, and at the bottom, the leaf operators represent scan or read operations from datasets.

Data processing begins with the reading of datasets at the bottom of the tree structure, and data is sequentially processed up the tree. A query plan can have many branches, and each branch is processed separately until a join or other operation connects it to the rest of the tree.

Phases

A query plan is composed of query phases (also called major fragments), and each phase defines a series of operations that are running in parallel. A query phase is depicted by the same colored boxes that are grouped together in a visual profile.

Within the query phases are multiple, single-threaded instances (also called minor fragments) running in parallel. Each thread is processing a different set of data through the same series of operations, and this data is exchanged from one phase to another. The number of threads for each operator can be found in the Details section (right panel) of a visual profile.

Use Visual Profiles

To navigate to the visual profile for a job:

- Click

in the side navigation bar.

in the side navigation bar. - On the Jobs page, click a job that you would like to see the visual profile for.

- At the top of the next page, click the Visual Profile tab to open.

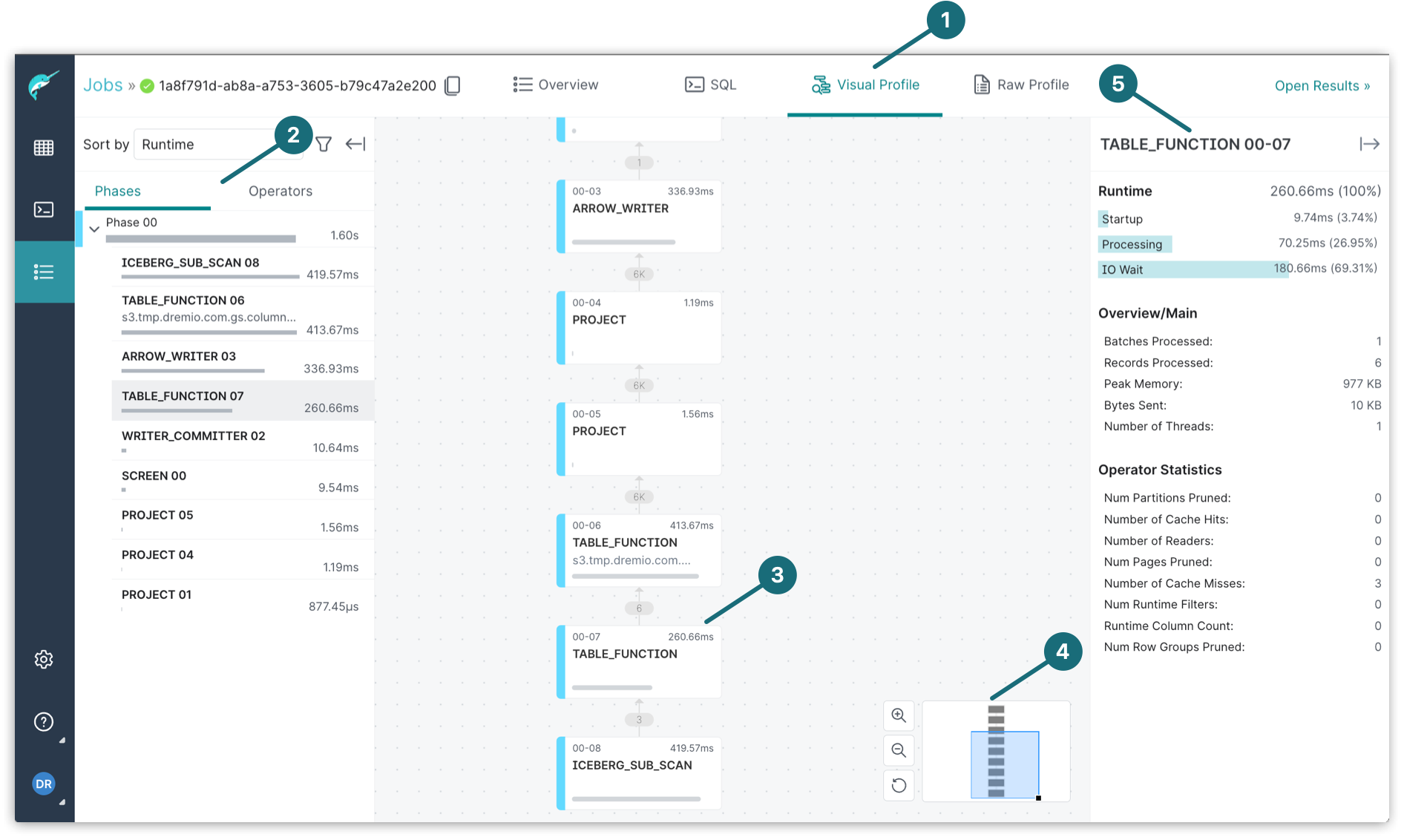

The main components of a visual profile are shown below:

| Location | Description |

|---|---|

| 1 | The Visual Profile tab shows a visual representation of a query profile. |

| 2 | The left panel is where you can view the phases of the query execution or single operators, sorting them by runtime, total memory used, or records produced. Operators of the same color are within the same phase. Clicking the Collapse  hides the left panel from view. hides the left panel from view. |

| 3 | The tree graph allows you to select an operator and find out where it is in relation to the rest of the query plan. |

| 4 | The zoom controls the size of the tree graph so it's easier for you to view. |

| 5 | The right panel shows the details and statistics about the selected operator. Clicking the Collapse  hides the right panel from view. hides the right panel from view. |

Use Cases

Improve the Performance of Queries

You may notice that a query is taking more time than expected and want to know if something can be done to reduce the execution time. By viewing its visual profile, you can, for example, quickly find the operators with the highest processing times.

You might decide to try making simple adjustments to cause Dremio to choose a different plan. Some of the possible adjustments include:

- Adding a filter on a partition column to reduce the amount of data scanned

- Changing join logic to avoid expanding joins (which return more rows than either of the inputs) or nested-loop joins

- Creating a Reflection to avoid some of processing-intensive work done by the query

Reduce Query-Execution Costs

If you are an administrator, you may be interested in tuning the system as a whole to support higher concurrency and lower resource usage across the system, because you want to identify the most expensive queries in the system and then see what can be done to lower the cost of these queries. Such an investigation is often important even if individual users are happy with the performance of their own queries.

On the Jobs page, you can use the columns to find the queries with the highest cost, greatest number of rows scanned, and more. You can then study the visual profiles for these queries, identifying system or data problems, and mismatches between how data is stored and how these queries retrieve it. You can try repartitioning data, modifying data types, sorting, creating views, creating Reflections, and other changes.

Raw Profiles

Click Raw Profile to open a raw profile of the job in a separate dialog, which includes a job summary, state durations, threads, resource allocation, operators, visualized plan, acceleration, and other details.

A raw profile is a UI-generated profile that is a subset of the data that you can download and provides a summary of metrics collected for each executed query that can be used to monitor and analyze query performance.

To navigate to a raw profile:

- Click

in the side navigation bar to open the Jobs page.

in the side navigation bar to open the Jobs page. - On the Jobs page, click a job that you would like to see the raw profile for.

- At the top of the next page, click the Raw Profile tab to open a raw profile of the job in a separate dialog. The associated raw profile dialog shows a variety of information for review.

Views

Within the Raw Profile dialog, you can analyze the Job Metrics based on the following views:

| View | Description |

|---|---|

| Query | Shows the selected query statement and job metrics. See if your SQL query is what you were expecting and the query is run against the source data. |

| Visualized Plan | Shows a visualized diagram and job metrics. This view is useful in understanding the flow of the query and for analyzing out of memory issues and incorrect results. The detailed visualized pan diagram is always read from the bottom up. |

| Planning | Shows planning metrics, query output schema, non default options, and job metrics. This view shows how query planning is executed, because it provides statistics about the actual cost of the query operations in terms of memory, input/output, and CPU processing. You can use this view to identify which operations consumed the majority of the resources during a query and to address the cost-intensive operations. In particular, the following information is useful:

|

| Acceleration | Shows Reflection outcome, canonicalized user query alternatives, Reflection details, and job metrics.

|

| Error | (If applicable) Shows information about an error. The Failure Node is always the coordinator node and the server name inside the error message is the actual affected node. |

Job Metrics

Each view displays the following metrics:

Job Summary

The job summary information includes:

- State

- Coordinator

- Threads

- Command Pool Wait

- Total Query Time

- # Joins in user query

- # Joins in final plan

- Considered Reflections

- Matched Reflections

- Chosen Reflections

Time in UTC

The Time in UTC section lists the job's start and end time, in UTC format.

State Durations

The State Durations section lists the length of time (in milliseconds) for each of the job states:

- Pending

- Metadata Retrieval

- Planning

- Engine Start

- Queued

- Execution Planning

- Starting

- Running

For descriptions of the job states, see Job States and Statuses.

Context

If you are querying an Iceberg catalog object, the Context section lists the Iceberg catalog and branch that is referenced in the query. Otherwise, the Context section is not populated. Read Iceberg Catalogs in Dremio for more information.

Threads

The Threads section provides an overview table and a major fragment block for each major fragment. Each row in the Overview table provides the number of minor fragments that Dremio parallelized from each major fragment, as well as aggregate time and memory metrics for the minor fragments.

Major fragment blocks correspond to a row in the Overview table. You can expand the blocks to see metrics for all of the minor fragments that were parallelized from each major fragment, including the host on which each minor fragment ran. Each row in the major fragment table presents the fragment state, time metrics, memory metrics, and aggregate input metrics of each minor fragment.

In particular, the following metrics are useful:

- Setup – Time opening and closing of files.

- Waiting – Time waiting on the CPU.

- Blocked on Downstream – Represents completed work whereas the next phase is not ready to accept work.

- Blocked on Upstream – Represents the phase before it is ready to give work though the current phase is not ready.

- Phase Metrics – Displays memory used per node (Phases can run in parallel).

Resource Allocation

The Resource Allocation section shows the following details for managed resources and workloads:

- Engine Name

- Queue Name

- Queue Id

- Query Cost

- Query Type

Nodes

The Nodes section includes host name, resource waiting time, and peak memory.

Operators

The Operators section shows aggregate metrics for each operator within a major fragment that performed relational operations during query execution.

Operator Overview Table

The following table lists descriptions for each column in the Operators Overview table:

| Column Name | Description |

|---|---|

| SqlOperatorImpl ID | The coordinates of an operator that performed an operation during a particular phase of the query. For example, 02-xx-03 where 02 is the major fragment ID, xx corresponds to a minor fragment ID, and 03 is the Operator ID. |

| Type | The operator type. Operators can be of type project, filter, hash join, single sender, or unordered receiver. |

| Min Setup Time, Avg Setup Time, Max Setup Time | In general, the time spent opening and closing files. Specifically, the minimum, average, and maximum amount of time spent by the operator to set up before performing the operation. |

| Min Process Time, Avg Process Time, Max Process Time | The shortest amount of time the operator spent processing a record, the average time the operator spent in processing each record, and the maximum time that the operator spent in processing a record. |

| Wait (min, avg, max) | In general, the time spent waiting on Disk I/O. These fields represent the minimum, average, and maximum times spent by operators waiting on disk I/O. |

| Avg Peak Memory | Represents the average of the peak direct memory allocated across minor fragments. Relates to the memory needed by operators to perform their operations, such as hash join or sort. |

| Max Peak Memory | Represents the maximum of the peak direct memory allocated across minor fragments. Relates to the memory needed by operators to perform their operations, such as hash join or sort. |

Operator Block

The Operator Block shows time and memory metrics for each operator type within a major fragment. Examples of operator types include:

- SCREEN

- PROJECT

- WRITER_COMMITTER

- ARROW_WRITER

The following table describes each column in the Operator Block:

| Column Name | Description |

|---|---|

| Thread | The coordinate ID of the minor fragment on which the operator ran. For example, 04-03-01 where 04 is the major fragment ID, 03 is the minor fragment ID, and 01 is the Operator ID. |

| Setup Time | The amount of time spent by the operator to set up before performing its operation. This includes run-time code generation and opening a file. |

| Process Time | The amount of time spent by the operator to perform its operation. |

| Wait Time | The cumulative amount of time spent by an operator waiting for external resources. such as waiting to send records, waiting to receive records, waiting to write to disk, and waiting to read from disk. |

| Max Batches | The maximum number of record batches consumed from a single input stream. |

| Max Records | The maximum number of records consumed from a single input stream. |

| Peak Memory | Represents the peak direct memory allocated. Relates to the memory needed by the operators to perform their operations, such as hash join and sort. |

| Host Name | The hostname of the Executor the minor fragment is running on. |

| Record Processing Rate | The rate at which records in the minor fragment are being processed. Combined with the Host Name, the Record Processing Rate can help find hot spots in the cluster, either from skewed data or a noisy query running on the same cluster. |

| Operator State | The status of the minor fragment. |

| Last Schedule Time | The last time at which work related to the minor fragment was scheduled to be executed. |

Operator blocks also contain three drop-down menus: Operator Metrics, Operator Details, and Host Metrics. Operator Metrics and Operator Details are unique to the type of operator and provide more detail about the operation of the minor fragments. Operator Metrics and Operator Details are intended to be consumed by Dremio engineers. Depending on the operator, both can be blank. Host Metrics provides high-level information about the host used when executing the operator.