Apache Iceberg

Apache Iceberg enables Dremio to provide powerful, SQL database-like functionality on data lakes using industry-standard SQL commands. Dremio currently supports Iceberg v2 tables, offering a solid foundation for building and managing data lakehouse tables. Certain features, such as Iceberg native branching and tagging, and the UUID data type, are not yet supported.

For a deeper dive into Apache Iceberg, see:

Benefits of Iceberg Tables

Iceberg tables offer the following benefits over other formats traditionally used in the data lake, including:

- Schema evolution: Supports add, drop, update, or rename column commands with no side effects or inconsistency.

- Partition evolution: Facilitates the modification of partition layouts in a table, such as data volume or query pattern changes without needing to rewrite the entire table.

- Transactional consistency: Helps users avoid partial or uncommitted changes by tracking atomic transactions with atomicity, consistency, isolation, and durability (ACID) properties.

- Increased performance: Ensures data files are intelligently filtered for accelerated processing via advanced partition pruning and column-level statistics.

- Time travel: Allows users to query any previous versions of the table to examine and compare data or reproduce results using previous queries.

- Automatic optimization: Optimize query performance to maximize the speed and efficiency with which data is retrieved.

- Version rollback: Corrects any discovered problems quickly by resetting tables to a known good state.

Clustering

Clustered Iceberg tables in Dremio makes use of Z-Ordering to provide a more intuitive data layout with comparable or better performance characteristics to Iceberg partitioning.

Iceberg clustering sorts individual records in data files based on the clustered columns provided in the CREATE TABLE or ALTER TABLE statement. The data file level clustering of data allows Parquet metadata to be used in query planning and execution to reduce the amount of data scanned as part of the query. In addition, clustering eliminates common problems with partitioned data, such as over-partitioned tables and partition skew.

Clustering provides a general-purpose file layout that enables both efficient reads and writes. However, you may not see immediate benefits from clustering if the tables are too small.

A common pattern is to choose clustered columns that are either primary keys of the table or commonly used for query filters. These column choices will effectively filter the working dataset, thereby improving query times. Clustered columns are ordered in precedence of filtering or cardinality with the most commonly queried columns of highest cardinality first.

Supported Data Types for Clustered Columns

Dremio Iceberg clustering supports clustered columns of the following data types:

DECIMALINTBIGINTFLOATDOUBLEVARCHARVARBINARYDATETIMETIMESTAMP

Automated table maintenance eliminates the need to run optimizations for clustered Iceberg tables manually, although if using manual optimization, its behavior differs based on whether or not tables are clustered.

Iceberg Table Management

Learn how to manage Iceberg tables in Dremio with supported Iceberg features such as expiring snapshots and optimizing tables.

Vacuum

Each write to an Iceberg table creates a snapshot of that table, which is a timestamped version of the table. As snapshots accumulate, data files that are no longer referenced in recent snapshots take up more and more storage. Additionally, the more snapshots a table has, the larger its metadata becomes. You can expire older snapshots to delete the data files that are unique to them and to remove them from table metadata. It is recommended that you expire snapshots regularly. For the SQL command to expire snapshots, see VACUUM TABLE.

Sometimes failed SQL commands may leave orphan data files in the table location that are no longer referenced by any active snapshot of the table. You can remove orphan files in the table location by running remove_orphan_files. See VACUUM TABLE for details.

Optimization

Dremio provides automatic optimization, which automatically maintains Iceberg tables in the Open Catalog using a dedicated engine configured by Dremio. However, for immediate optimization, you can use the OPTIMIZE TABLE SQL command and route jobs to specific engines in your project by creating a routing rule with the query_label() condition and the OPTIMIZATION label. For more information, see Workload Management.

When optimizing tables manually, you can use:

-

FOR PARTITIONSto optimize selected partitions. -

MIN_INPUT_FILESto consider the minimum number of qualified files needed for compaction. Delete files count towards determining whether the minimum threshold is reached.

Iceberg Catalogs in Dremio

The Apache Iceberg table format uses an Iceberg catalog service to track snapshots and ensure transactional consistency between tools. For more information about how Iceberg catalogs and tables work together, see Iceberg Catalog.

Currently, Dremio does not support the Amazon DynamoDB nor JDBC catalogs. For additional information on limitations of Apache Iceberg as implemented in Dremio, see Limitations.

The catalog is the source of truth for the current metadata pointer for a table. You can use Dremio's Open Catalog as a catalog for all your tables. You can also add external Iceberg catalogs as a source in Dremio, which allows you to work with Iceberg tables that are not cataloged in Dremio's Open Catalog.The list of Iceberg catalogs that can be added as a source can be found here:

- AWS Glue Data Catalog

- Iceberg REST Catalog

- Snowflake Open Catalog

- Unity Catalog

Once a table is created with a specific catalog, you must continue using that same catalog to access the table. For example, if you create a table using AWS Glue as the catalog, you cannot later access that table by adding its S3 location as a source in Dremio. You must add the AWS Glue Data Catalog as a source and access the table through it.

Rollbacks

When you modify an Iceberg table using data definition language (DDL) or data manipulation language (DML), each change creates a new snapshot in the table's metadata. The Iceberg catalog tracks the current snapshot through a root pointer.

You can use the ROLLBACK TABLE SQL command to roll back a table by redirecting this pointer to an earlier snapshot—useful for undoing recent data errors. Rollbacks can target a specific timestamp or snapshot ID.

When you perform a rollback, Dremio creates a new snapshot identical to the selected one. For example, if a table has snapshots (1) first_snapshot, (2) second_snapshot, and (3) third_snapshot, rolling back to first_snapshot restores the table to that state while preserving all snapshots for time travel queries.

SQL Command Compatibility

Dremio supports running most combinations of concurrent SQL commands on Iceberg tables. To take a few examples, two INSERT commands can run concurrently on the same table, as can two SELECT commands, or an UPDATE and an ALTER command.

However, Apache Iceberg’s Serializable Isolation level with non-locking table semantics can result in scenarios in which write collisions occur. In these circumstances, the SQL command that finishes second fails with an error. Such failures occur only for a subset of combinations of two SQL commands running concurrently on a single Iceberg table.

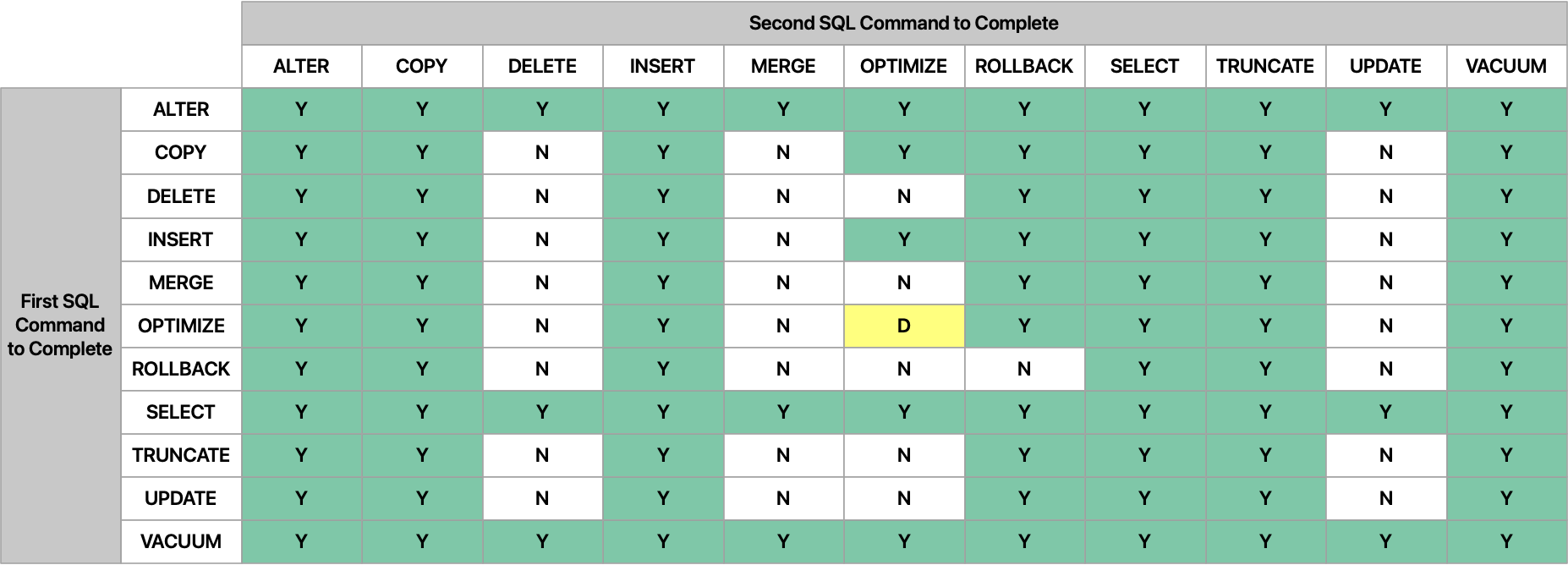

This table shows which types of SQL commands can and cannot run concurrently with other types on a single Iceberg table:

- Y: Running these two types of commands concurrently is supported.

- N: Running these two types of commands concurrently is not supported. The second command to complete fails with an error.

- D: Running two

OPTIMIZEcommands concurrently is supported if they run against different table partitions.

Table Properties

The following Apache Iceberg table properties are supported in Dremio. You can use these properties to configure aspects of Apache Iceberg tables:

| Property | Description | Default |

|---|---|---|

| commit.manifest.target-size-bytes | The target size when merging manifest files. | 8 MB |

| commit.status-check.num-retries | The number of times to check whether a commit succeeded after a connection is lost before failing due to an unknown commit state. | 3 |

| compatibility.snapshot-id-inheritance.enabled | Enables committing snapshots without explicit snapshot IDs. | false (always true if the format version is > 1) |

| format-version | The table’s format version defined in the Spec. Options: 1 or 2 | 2 |

| history.expire.max-snapshot-age-ms | The maximum age (in milliseconds) of snapshots to keep as expiring snapshots. | 432000000 (5 days) |

| history.expire.min-snapshots-to-keep | The default minimum number of snapshots to keep as expiring snapshots. | 1 |

| write.delete.mode | The table’s method for handling row-level deletes. See Row-Level Changes on the Lakehouse: Copy-On-Write vs. Merge-On-Read in Apache Iceberg for more information on which mode is best for your table’s DML operations. Options: copy-on-write or merge-on-read | copy-on-write |

| write.merge.mode | The table’s method for handling row-level merges. See Row-Level Changes on the Lakehouse: Copy-On-Write vs. Merge-On-Read in Apache Iceberg for more information on which mode is best for your table’s DML operations. Options: copy-on-write or merge-on-read | copy-on-write |

| write.metadata.compression-codec | The Metadata compression codec. Options: none or gzip | none |

| write.metadata.delete-after-commit.enabled | Controls whether to delete the oldest tracked version metadata files after commit. | false |

| write.metadata.metrics.column.col1 | Metrics mode for column col1 to allow per-column tuning. Options: none, counts, truncate(length), or full | (not set) |

| write.metadata.metrics.default | Default metrics mode for all columns in the table. Options: none, counts, truncate(length), or full | truncate(16) |

| write.metadata.metrics.max-inferred-column-defaults | Defines the maximum number of top-level columns for which metrics are collected. The number of stored metrics can be higher than this limit for a table with nested fields. | 100 |

| write.metadata.previous-versions-max | The maximum number of previous version metadata files to keep before deleting after commit. | 100 |

| write.parquet.compression-codec | The Parquet compression codec. Options: zstd, gzip, snappy, or uncompressed | zstd |

| write.parquet.compression-level | The Parquet compression level. Supported for gzip and zstd. | null |

| write.parquet.dict-size-bytes | The Parquet dictionary page size (in bytes). | 2097152 (2 MB) |

| write.parquet.page-row-limit | The Parquet page row limit. | 20000 |

| write.parquet.page-size-bytes | The Parquet page size (in bytes). | 1048576 (1 MB) |

| write.parquet.row-group-size-bytes | Parquet row group size. Dremio uses this property as a target file size since it writes one row-group per Parquet file. Ignores the store.parquet.block-size and dremio.iceberg.optimize.target_file_size_mb support keys. | 134217728 (128 MB) |

| write.summary.partition-limit | Includes partition-level summary stats in snapshot summaries if the changed partition count is less than this limit. | 0 |

| write.update.mode | The table’s method for handling row-level updates. See Row-Level Changes on the Lakehouse: Copy-On-Write vs. Merge-On-Read in Apache Iceberg for more information on which mode is best for your table’s DML operations. Options: copy-on-write or merge-on-read | copy-on-write |

You can configure these properties when you create or alter Iceberg tables.

Dremio uses the Iceberg default value for table properties that are not set. See Iceberg's documentation for the full list of table properties. To view the properties that are set for a table, use the SQL command SHOW TBLPROPERTIES.

In cases where Dremio has a support key for a feature covered by a table property, Dremio uses the table property instead of the support key.

Limitations

The following are limitations with Apache Iceberg as implemented in Dremio:

- Only Parquet file formats are currently supported. Other formats (such as ORC and Avro) are not supported at this time.

- Amazon DynamoDB and JDBC catalogs are currently not supported.

- Unable to use DynamoDB as a lock manager with the Hadoop catalog on Amazon S3.

- Dremio caches query plans for recently executed statements to improve query performance. However, running a rollback query using a snapshot ID invalidates all cached query plans that reference the affected table.

- If a table is running DML operations when a rollback query using a snapshot ID executes, the DML operations can fail to complete because the current snapshot ID has changed to a new value due to the rollback query. However,

SELECTqueries that are in the midst of executing can be completed. - Clustering keys must be columns in the table. Transformations are not supported.

- You can run only one optimize query at a time on the selected Iceberg table partition.

- The optimize functionality does not support sort ordering.

Related Topics

- Automatic Optimization – Learn how Dremio optimizes Iceberg tables automatically.

- Load Data Into Tables - Load data from CSV, JSON, or Parquet files into existing Iceberg tables.

- SQL Commands – See the syntax of the SQL commands that Dremio supports for Iceberg tables.