Load Data into Tables

Dremio supports three main methods for loading data into Apache Iceberg tables. You can copy data from CSV, JSON, or Parquet files directly into an existing table, set up autoingest pipes to continuously ingest new data as it arrives, or upload local files as Iceberg tables in a catalog.

Copy Data into Tables

Querying large datasets stored in CSV or JSON files can be inefficient. For faster performance and scalability, load your data into Apache Iceberg tables, which use the columnar Parquet format for optimized storage and retrieval. Even queries on Parquet files perform faster when the data is stored in Iceberg tables, enabling you to take full advantage of Dremio’s Iceberg capabilities.

Prerequisites

- At least one column in the target table must match a column in every data file.

- Files cannot contain duplicate column names.

- CSV data files must have a header line at the start of the file.

- Supported storage locations: Azure Storage or Amazon S3.

Copy Operation

Use the COPY INTO SQL command to load data from CSV, JSON, and Parquet files into existing Iceberg tables. The operation matches columns in the files to columns in the target table and loads data accordingly.

The copy operation supports Iceberg tables in the Open Catalog, AWS Glue Data Catalog, and catalogs that implement the Iceberg REST Catalog specification.

The copy operation verifies that at least one column in the target table matches a column represented in the data files. It then follows these rules:

- If a match is found, the values in the data files are loaded into the column or columns.

- If additional non-matching columns are present in the data files, the values in these columns are not loaded.

- If additional non-matching columns are present in the target table, the operation inserts NULL values into these columns.

- If no column in the target table matches any column represented in the data files, the operation fails.

The copy operation ignores case when comparing column names.

Type Coercion

For a list of the type coercions used by the copy operation when copying data from CSV and JSON files, see Type Coercion When Copying Data from CSV or JSON Files Into Apache Iceberg Tables.

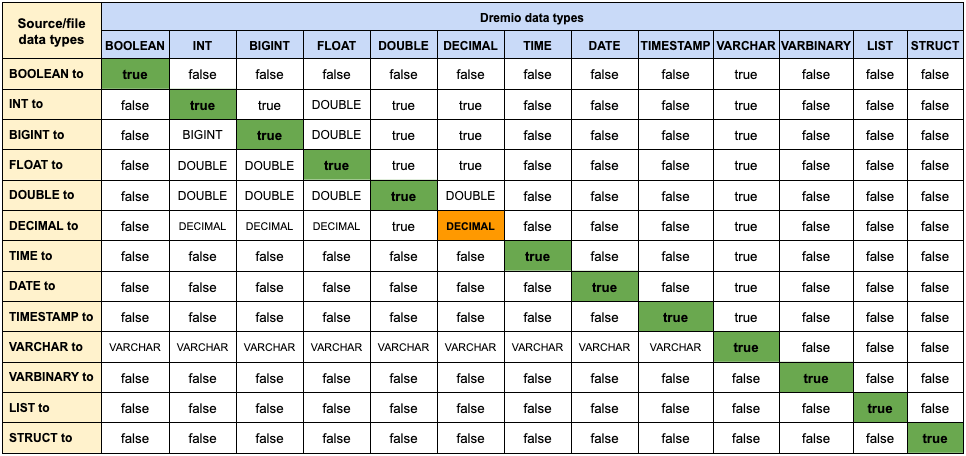

For the type coercions used by the copy operation when copying data from Parquet files, refer to this table:

Column Nullability Constraints

A column's nullability constraint defines whether the column can contain NULL values, because you can specify that each column is either:

NULL— AllowsNULLvalues, which is useful for optional or unknown data.NOT NULL— Requires a value for every row;NULLvalues are not allowed.

When running COPY INTO with ON_ERROR set to 'continue' or 'skip_file', the command will not fail on nullability conflicts. Instead, it skips the problematic file or record.

However, if ON_ERROR is set to 'abort' (or left unspecified), the command will fail if any row violates the table’s NOT NULL constraints.

Autoingest Data Preview

You can use autoingest pipes to automatically ingest data into Apache Iceberg tables.

Autoingest pipes are objects in Dremio that represent event-driven ingestion pipelines, which collect and load data into a centralized data repository for further analysis and utilization. Event-driven ingestion, or autoingestion, occurs when a new file is added to a specified cloud storage location, which sets off an event in Dremio to copy the new file into an Iceberg table. Dremio automatically ingests the file with micro-batch processing, loading files in small, predefined batches at regular intervals.

Autoingest pipes remove the complexity and operational overhead of setting up, running, and monitoring data pipelines by providing:

-

Single-Command Setup: Dremio provides a streamlined process for setting up and running autoingest pipes. Create an autoingest pipe using the

CREATE PIPESQL command to specify the parameters, and run a cloud-specific CLI command to set up the required infrastructure and connect your cloud storage to Dremio for autoingestion. -

File Deduplication: File deduplication eliminates copies of files and enhances storage utilization. Dremio's autoingest pipes provide file deduplication by ensuring that your pipe loads semantics only once and preventing files with the same name from loading in a given time period (the

DEDUPE_LOOKBACK_PERIOD). -

Event-Based Execution of

COPY INTO: After a new file is added in the source location, an event is sent to Dremio to run aCOPY INTOstatement. Ingested files are processed in micro-batches to optimize user resources and ensure that the data is efficiently loaded into Dremio. -

Execution Monitoring and Error Handling: For common issues that can occur with data pipelines, such as single rows that do not conform to the target table schema or read permissions being revoked on the source, Dremio takes the appropriate action to alert the user and suggest potential solutions.

-

Efficient Batching for Optimal Engine Utilization: When implementing an event-based loading system, users often execute a load command for every file immediately after the file is added to cloud storage. This frequent loading increases the likelihood that Iceberg file sizes will be smaller than the optimal size and the engine will be overutilized. Both of these issues result in higher total cost of ownership because they require running

OPTIMIZE TABLEjobs more frequently to compact Iceberg tables, which uses engine resources inefficiently. Dremio’s autoingest pipes efficiently organize requests into micro-batches that minimize the cost of loading data and maintain acceptable latency in a data lifecycle.

Autoingest pipes can only ingest data from Amazon S3 sources in Dremio.

Upload Local Files

You can upload an individual local file to Dremio if the file is 500 MB or smaller and in CSV, JSON, or Parquet format. During the upload process, Dremio formats the file into an Iceberg table.

To upload a file:

-

In the Dremio console, click

in the side navigation bar to go to the Datasets page.

in the side navigation bar to go to the Datasets page. -

Click Add Data in the bottom left corner of the Datasets page.

-

Upload the file either by:

a. Dragging the file from your local machine and dropping it in the Add Data dialog.

b. Clicking Choose file to upload and navigating to the file on your local machine.

If the file is large, it may take a few moments to upload, depending on your connection speed.

-

(Optional) During the upload process, configure the file settings. For example, configure how the file is delimited.

-

Click Save.

Limits

- Uploaded files are copies of your local file. Updates to your local file are not automatically reflected in Dremio.

- Bulk upload of multiple files is not supported.

Case Sensitivity

Dremio does not support case-sensitive data file names, table names, or column names.

For example, if you have three file names that have the same name, but with different cases (such as, MARKET, Market, and market), Dremio is unable to discern the case differences, resulting in unanticipated data results.

For column names, if two columns have the same name using different cases (such as Trip_Pickup_DateTime and trip_pickup_datetime) exist in the table, one of the columns may disappear when the header is extracted.