Refreshing Reflections

The data in a reflection can become stale and need to be refreshed. The refresh of a reflection causes two updates:

- The data stored in the Apache Iceberg table for the reflection is updated.

- The metadata that stores details about the reflection is updated.

Both of these updates are implied in the term "reflection refresh".

Dremio does not refresh the data that external reflections are mapped to.

Types of Reflection Refresh

How reflections are refreshed depends on the format of the base table.

Types of Refresh for Reflections on Apache Iceberg Tables and on Certain Types of Datasets in Filesystem Sources, Glue Sources, and Hive Sources

There are two methods that can be used to refresh reflections that are defined either on Iceberg tables or on these types of datasets in filesystem, Glue, and Hive sources:

- Parquet datasets in Filesystem sources (on S3, Azure Storage, Google Cloud Storage, or HDFS)

- Parquet datasets, Avro datasets, or non-transactional ORC datasets on Glue or Hive (Hive 2 or Hive 3) sources

Iceberg tables in all supported file-system sources (Amazon S3, Azure Storage, Google Cloud Storage, and HDFS) and non-file-system sources (AWS Glue, Hive, and Nessie) can be refreshed with either of these methods.

Incremental refreshes

There are two types of incremental refreshes:

- Incremental refreshes when changes to an anchor table are only append operations

- Incremental refreshes when changes to an anchor table include non-append operations

- Whether an incremental refresh can be performed depends on the outcome of an algorithm.

- The initial refresh of a reflection is always a full refresh.

Incremental refreshes when changes to an anchor table are only append operations

Optimize operations on Iceberg tables are also supported for this type of incremental refresh.

This type of incremental refresh is used only when the changes to the anchor table are appends and do not include updates or deletes. There are two cases to consider:

- When a reflection is defined on one anchor table

When a reflection is defined on an anchor table or on a view that is defined on one anchor table, an incremental refresh is based on the differences between the current snapshot of the anchor table and the snapshot at the time of the last refresh.

- When a reflection is defined on a view that joins two or more anchor tables

When a reflection is defined on a view that joins two or more anchor tables, whether an incremental refresh can be performed depends on how many anchor tables have changed since the last refresh of the reflection:

- If just one of the anchor tables has changed since the last refresh, an incremental refresh can be performed. It is based on the differences between the current snapshot of the one changed anchor table and the snapshot at the time of the last refresh.

- If two or more anchor tables have changed since the last refresh, then a full refresh is used to refresh the reflection.
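As a conceptual illustration of a snapshot-based refresh for append-only changes, the sketch below models each snapshot as a plain list of data file names and returns the files that were added since the last refresh. This is a simplified model, not Dremio's or Iceberg's implementation: the function name `appended_files` and the file names are hypothetical, and optimize operations (which the note above says are also supported) are not modeled.

```python
def appended_files(previous_snapshot, current_snapshot):
    """Return the files added since the previous snapshot.

    Returns None when a file from the previous snapshot is missing from the
    current one, because the change is then not a pure append in this model.
    """
    previous, current = set(previous_snapshot), set(current_snapshot)
    if not previous <= current:
        # Something was removed or rewritten, so this is not append-only.
        return None
    return sorted(current - previous)


# Hypothetical snapshots: the state at the last refresh and the current state.
last_refresh = ["part-0.parquet", "part-1.parquet"]
now = ["part-0.parquet", "part-1.parquet", "part-2.parquet"]
print(appended_files(last_refresh, now))
```

In this model, only the returned files would need to be read to bring the reflection up to date.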

Incremental refreshes when changes to an anchor table include non-append operations

For Iceberg tables, this type of incremental refresh is used when the changes are DML operations that delete or modify the data (UPDATE, DELETE, etc.) made either through the Copy-on-Write (COW) or the Merge-on-Read (MOR) storage mechanism. For more information about COW and MOR, see Row-Level Changes on the Lakehouse: Copy-On-Write vs. Merge-On-Read in Apache Iceberg.

For sources in filesystems or Glue, non-append operations can include, for example:

- In filesystem sources, files being deleted from Parquet datasets

- In Glue sources, DML-equivalent operations being performed on Parquet datasets, Avro datasets, or non-transactional ORC datasets

Both the anchor table and the reflection must be partitioned, and the partition transforms that they use must be compatible.

There are two cases to consider:

- When a reflection is defined on one anchor table

When a reflection is defined on an anchor table or on a view that is defined on one anchor table, an incremental refresh is based on Iceberg metadata that is used to identify modified partitions and to restrict the scope of the refresh to only those partitions.

- When a reflection is defined on a view that joins two or more anchor tables

When a reflection is defined on a view that joins two or more anchor tables, whether an incremental refresh can be performed depends on how many anchor tables have changed since the last refresh of the reflection:

- If just one of the anchor tables has changed since the last refresh, an incremental refresh can be performed. It is based on Iceberg metadata that is used to identify modified partitions and to restrict the scope of the refresh to only those partitions.

- If two or more anchor tables have changed since the last refresh, then a full refresh is used to refresh the reflection.

Dremio uses Iceberg tables to store metadata for filesystem and Glue sources.

For information about partitioning reflections and applying partition transforms, see the section Horizontally Partition Reflections that Have Many Rows in Best Practices for Creating Raw and Aggregation Reflections.

For information about partitioning reflections in ways that are compatible with the partitioning of anchor tables, see Partition Reflections to Allow for Partition-Based Incremental Refreshes in Best Practices for Creating Raw and Aggregation Reflections.

Full refreshes

In a full refresh, a reflection is dropped, recreated, and loaded.

- Whether a full refresh is performed depends on the outcome of an algorithm.

- The initial refresh of a reflection is always a full refresh.

Algorithm for Determining Whether an Incremental or a Full Refresh is Used

The following algorithm determines which refresh method is used:

- If the reflection has never been refreshed, then a full refresh is performed.

- If the reflection is created from a view that uses nested group-bys, joins other than inner or cross joins, unions, or window functions, then a full refresh is performed.

- If the reflection is created from a view that joins two or more anchor tables and more than one anchor table has changed since the previous refresh, then a full refresh is performed.

- If the reflection is based on a view and the changed anchor table is used multiple times in that view, then a full refresh is performed.

- If the changes to the anchor table are only appends, then an incremental refresh based on table snapshots is performed.

- If the changes to the anchor table include non-append operations, then the compatibility of the partitions of the anchor table and the partitions of the reflection is checked:

- If the partitions of the anchor table and the partitions of the reflection are not compatible, or if either the anchor table or the reflection is not partitioned, then a full refresh is performed.

- If the partition scheme of the anchor table has been changed since the last refresh to be incompatible with the partition scheme of a reflection, and if changes have occurred to data belonging to a prior partition scheme or the new partition scheme, then a full refresh is performed. To avoid a full refresh when these two conditions hold, update the partition scheme for the reflection to match the partition scheme for the table. You can do so in the Advanced reflection editor or through the ALTER DATASET SQL command.

- If the partitions of the anchor table and the partitions of the reflection are compatible, then an incremental refresh is performed.

Because this algorithm is used to determine which type of refresh to perform, you do not select a type of refresh for reflections in the settings of the anchor table.

However, no data is read in the REFRESH REFLECTION job for reflections that are dependent only on Iceberg, Parquet, Avro, non-transactional ORC datasets, or other reflections and that have no new data since the last refresh based on the table snapshots. Instead, a "no-op" reflection refresh is planned and a materialization is created, eliminating redundancy and minimizing the cost of a full or incremental reflection refresh.
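The decision steps listed above can be sketched as a single function that checks the conditions in order. This is only an illustrative model: the `RefreshState` class, its field names, and the returned labels are hypothetical, the partition-scheme-change rule is folded into the single `partitions_compatible` flag, and the "no-op" case is not modeled.

```python
from dataclasses import dataclass


@dataclass
class RefreshState:
    """Hypothetical summary of the facts the algorithm above depends on."""
    never_refreshed: bool = False
    # Nested group-bys, joins other than inner or cross joins, unions, or window functions.
    view_uses_unsupported_operators: bool = False
    changed_anchor_tables: int = 1
    changed_table_used_multiple_times: bool = False
    only_appends: bool = True
    both_partitioned: bool = False
    partitions_compatible: bool = False


def choose_refresh(state: RefreshState) -> str:
    """Walk the documented conditions in order and return the refresh type."""
    if state.never_refreshed:
        return "full"
    if state.view_uses_unsupported_operators:
        return "full"
    if state.changed_anchor_tables > 1:
        return "full"
    if state.changed_table_used_multiple_times:
        return "full"
    if state.only_appends:
        return "incremental (snapshot-based)"
    # Non-append changes: both sides must be partitioned with compatible transforms.
    if not state.both_partitioned or not state.partitions_compatible:
        return "full"
    return "incremental (partition-based)"


print(choose_refresh(RefreshState(only_appends=False, both_partitioned=True,
                                  partitions_compatible=True)))
```

Ordering the checks this way mirrors the list: every condition that forces a full refresh is ruled out before either kind of incremental refresh is considered.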

Type of Refresh for Reflections on Delta Lake tables

Only full refreshes are supported. In a full refresh, the reflection being refreshed is dropped, recreated, and loaded.

Types of Refresh for Reflections on all other tables

- Incremental refreshes

Dremio appends data to the existing data for a reflection. Incremental refreshes are faster than full refreshes for large reflections, and are appropriate for reflections that are defined on tables that are not partitioned.

There are two ways in which Dremio can identify new records:

- For directory datasets in file-based data sources like S3 and HDFS: Dremio can automatically identify new files in the directory that were added after the prior refresh.

- For all other datasets (such as datasets in relational or NoSQL databases): An administrator specifies a strictly monotonically increasing field, such as an auto-incrementing key, that must be of type BigInt, Int, Timestamp, Date, Varchar, Float, Double, or Decimal. This allows Dremio to find and fetch the records that have been created since the last time the acceleration was incrementally refreshed.

Caution: Use incremental refreshes only for reflections that are based on tables and views that are appended to. If records can be updated or deleted in a table or view, use full refreshes for the reflections that are based on that table or view.
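To show why the chosen field must be strictly monotonically increasing, the sketch below models the second approach as a high-water mark: each refresh fetches only rows whose field value is greater than the largest value seen so far. The function `fetch_new_records`, the `id` field, and the sample rows are hypothetical and do not represent how Dremio queries the source.

```python
def fetch_new_records(rows, field, last_seen):
    """Return rows whose `field` exceeds `last_seen`, plus the new high-water mark."""
    new_rows = [row for row in rows if last_seen is None or row[field] > last_seen]
    if new_rows:
        last_seen = max(row[field] for row in new_rows)
    return new_rows, last_seen


# Hypothetical source table with an auto-incrementing key.
table = [{"id": 1}, {"id": 2}, {"id": 3}]
first_batch, mark = fetch_new_records(table, "id", None)

# Two more rows are appended before the next refresh.
table += [{"id": 4}, {"id": 5}]
second_batch, mark = fetch_new_records(table, "id", mark)
print(len(first_batch), len(second_batch), mark)
```

A row that is updated in place keeps its old key and is never picked up again, which is why the caution above recommends full refreshes when records can be updated or deleted.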

- Full refreshes

In a full refresh, the reflection being refreshed is dropped, recreated, and loaded.

Full refreshes are always used in these three cases:

- A reflection is partitioned on one or more fields.

- A reflection is created on a table that was promoted from a file, rather than from a folder, or is created on a view that is based on such a table.

- A reflection is created from a view that uses nested group-bys, joins, unions, or window functions.

Best practice: Time reflection refreshes to occur after metadata refreshes of tables

Time your reflection refreshes to occur only after the metadata for their underlying tables is refreshed. Otherwise, reflection refreshes do not include data from any files that were added to a table since the last metadata refresh.

For example, suppose a data source that is promoted to a table consists of 10,000 files, and that the metadata refresh for the table is set to happen every three hours. Subsequently, reflections are created from views on that table, and the refresh of reflections on the table is set to occur every hour.

Now, one thousand files are added to the table. Before the next metadata refresh, the reflections are refreshed twice, yet the refreshes do not add data from those one thousand files. Only on the third refresh of the reflections does data from those files get added to the reflections.
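The timing in this example can be traced with a small simulation. It assumes, purely for illustration, that the files arrive just after a metadata refresh at hour 0 and that a metadata refresh runs before a reflection refresh when both fall on the same hour. The constants and the `simulate` function are hypothetical.

```python
METADATA_REFRESH_HOURS = 3    # metadata refresh interval from the example
REFLECTION_REFRESH_HOURS = 1  # reflection refresh interval from the example


def simulate(files_added_at_hour, horizon_hours):
    """Return (hour, new_files_included) for each reflection refresh."""
    known_to_metadata = False
    results = []
    for hour in range(1, horizon_hours + 1):
        # Assumption: the metadata refresh runs first when both coincide.
        if hour % METADATA_REFRESH_HOURS == 0 and hour >= files_added_at_hour:
            known_to_metadata = True
        if hour % REFLECTION_REFRESH_HOURS == 0:
            results.append((hour, known_to_metadata))
    return results


for hour, included in simulate(files_added_at_hour=0, horizon_hours=3):
    print(f"hour {hour}: new files included = {included}")
```

The first two reflection refreshes run without the new files, and only the third, which follows the metadata refresh, includes them.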

Setting the Refresh Policy for Reflections

In the settings for a data source, you specify the refresh policy for refreshes of all reflections that are on the tables in that data source. The default policy is period-based, with one hour between each refresh. If you select a schedule policy, the default is every day at 8:00 a.m. (UTC).

In the settings for a table that is not in the Iceberg or Delta Lake format, you can specify the type of refresh to use for all reflections that are ultimately derived from the table. The default refresh type is Full refresh.

For tables in all supported table formats, you can specify a refresh policy for reflection refreshes that overrides the policy specified in the settings for the table's data source. The default policy is the schedule set at the source of the table.

Types of Refresh Policies

Datasets and sources can set reflections to refresh according to the following policy types:

| Refresh policy type | Description |

|---|---|

| Never | Reflections are not refreshed. |

| Period (default) | Reflections refresh at an interval of the specified number of hours, days, or weeks. The default refresh period is one hour. |

| Schedule | Reflections refresh at a specific time on the specified days of the week, in UTC. The default is every day at 8:00 a.m. (UTC). |

| Auto refresh when Iceberg table data changes | Reflections automatically refresh for underlying Iceberg tables whenever new updates occur. Reflections under this policy type are known as Live Reflections. Live Reflections are also updated based on the minimum refresh frequency defined by the source-level policy. This refresh policy is only available for data sources that support the Iceberg table format. |

Procedures for Setting and Editing the Refresh Policy

To set the refresh policy on a data source:

- Right-click a data lake or external source.

- Select Edit Details.

- In the sidebar of the Edit Source window, select Reflection Refresh.

- When you are done making your selections, click Save. Your changes go into effect immediately.

To edit the refresh policy on a table:

- Locate the table.

- Hover over the row in which it appears and click the icon to the right.

- In the sidebar of the Dataset Settings window, click Reflection Refresh.

- When you are done making your selections, click Save. Your changes go into effect immediately.

Manually Triggering a Refresh

You can click a button to start the refresh of all of the reflections that are defined on a table or on views derived from that table.

- Locate the table.

- Hover over the row in which it appears and click the icon to the right.

- In the sidebar of the Dataset Settings window, click Reflection Refresh.

- Click Refresh Now. The message "All dependent reflections will be refreshed." appears at the top of the screen.

- Click Save.

Viewing the Refresh History for Reflections

You can find out whether a refresh job for a reflection has run, and how many times refresh jobs for a reflection have been run.

Procedure

- Go to the space that lists the table or view from which the reflection was created.

- Hover over the row for the table or view.

- In the Actions field, click the icon.

- In the sidebar of the Dataset Settings window, select Reflections.

- Click History in the heading for the reflection.

Result

The Jobs page is opened with the ID of the reflection in the search box, and only jobs related to that ID are listed.

When a reflection is refreshed, Dremio runs a single job with two steps:

- The first step writes the query results as a materialization to the distributed acceleration storage by running a REFRESH REFLECTION command.

- The second step registers the materialization table and its metadata with the catalog so that the query optimizer can find the reflection's definition and structure.

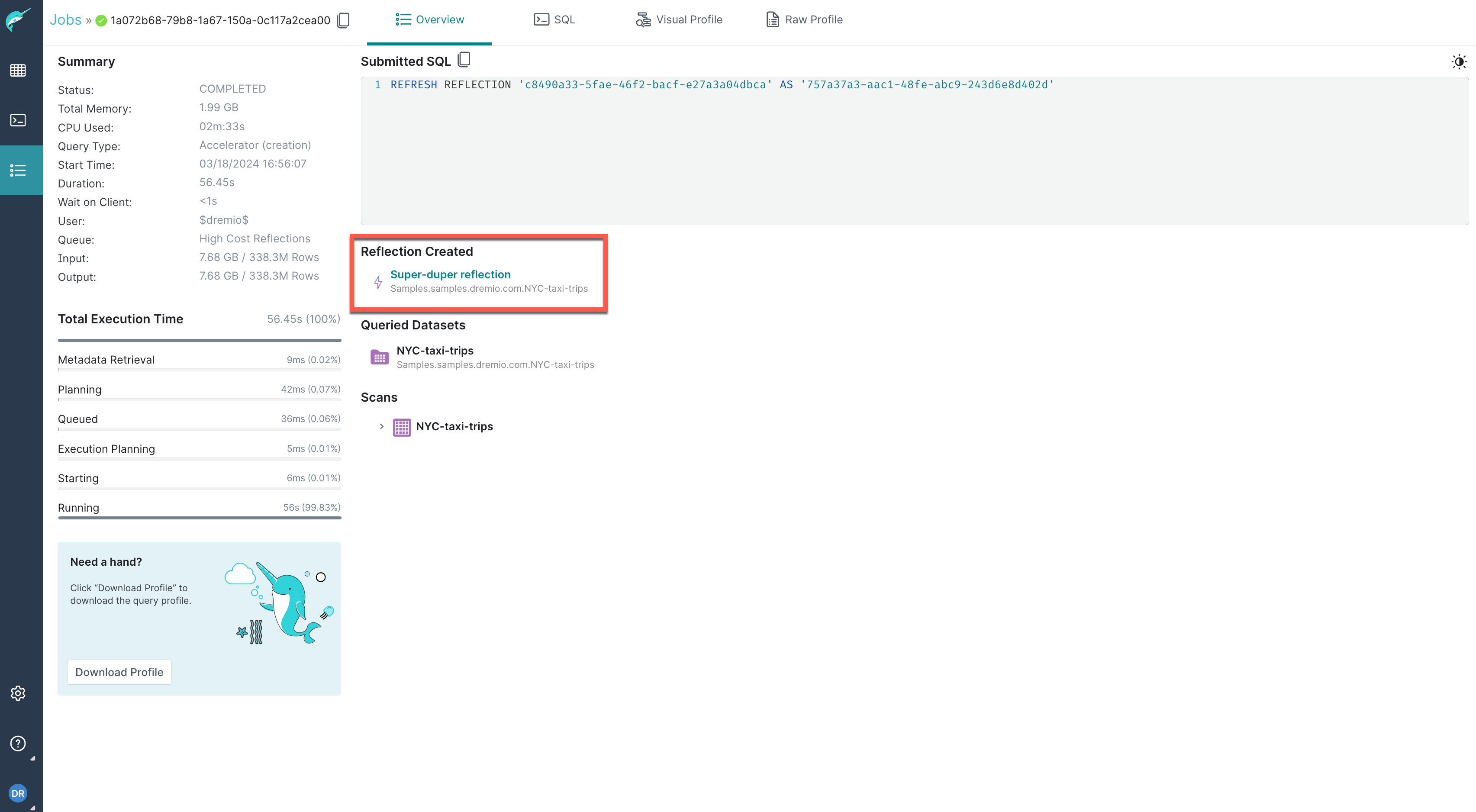

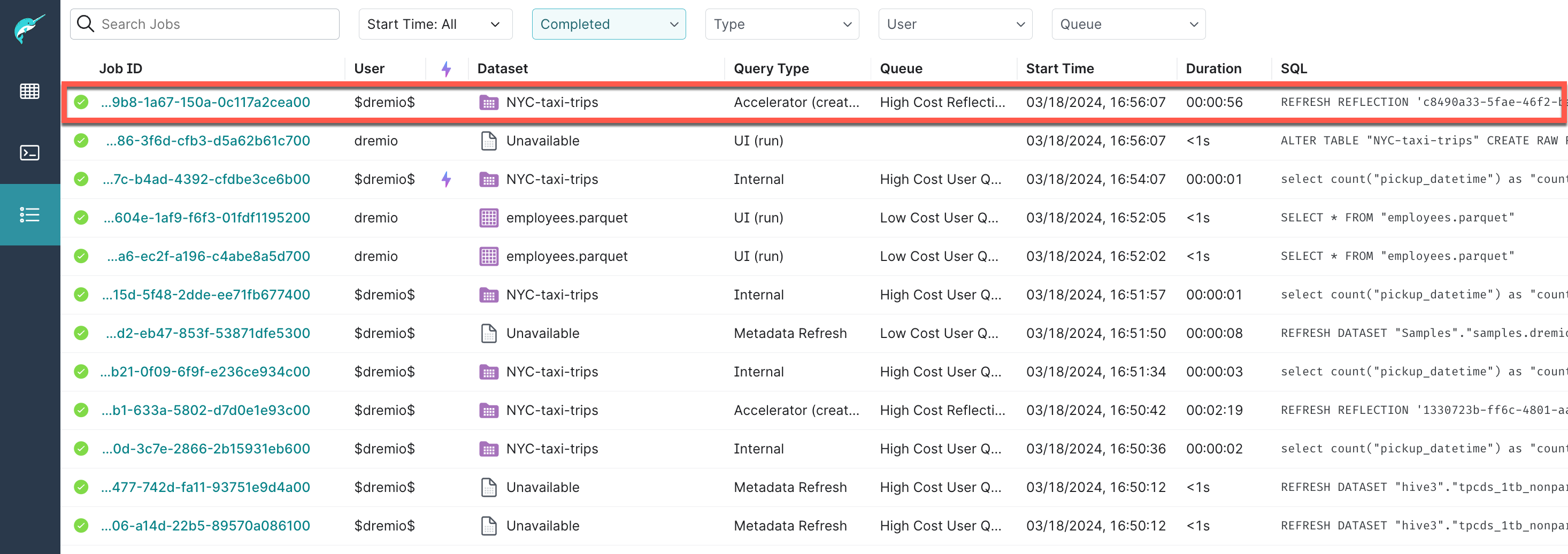

The following screenshot shows the REFRESH REFLECTION command used to refresh the reflection named Super-duper reflection:

The reflection refresh is listed as a single job on the Jobs page, as shown in the example below:

To find out which type of refresh was performed:

- Click the ID of the job that ran the REFRESH REFLECTION command.

- Click the Raw Profile tab.

- Click the Planning tab.

- Scroll down to the Refresh Decision section.

Retry Policy for Reflection Refreshes

When a reflection refresh job fails, Dremio retries the refresh according to a uniform policy. Dremio's retry policy focuses resources on newly failed reflections and helps keep reflection refresh jobs moving through an engine so that reflection data does not become too stale.

After a refresh failure, Dremio's default is to repeat the refresh attempt at exponential intervals up to 4 hours: 1 minute, 2 minutes, 5 minutes, 15 minutes, 30 minutes, 1 hour, 2 hours, and 4 hours. Then, Dremio continues trying to refresh the reflection every 4 hours. After 24 retries (approximately 71 hours and 52 minutes), Dremio stops retrying the refresh.
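The default schedule can be written out as a list of wait times to check the arithmetic. This sketch assumes that the eight listed intervals count toward the 24 retries and that every later retry waits 4 hours. The constant and function names are hypothetical.

```python
INITIAL_INTERVALS_MINUTES = [1, 2, 5, 15, 30, 60, 120, 240]
STEADY_INTERVAL_MINUTES = 240  # every 4 hours after the initial ramp-up
DEFAULT_MAX_RETRIES = 24


def retry_intervals(max_retries=DEFAULT_MAX_RETRIES):
    """Return the wait, in minutes, before each retry attempt."""
    intervals = INITIAL_INTERVALS_MINUTES[:max_retries]
    intervals += [STEADY_INTERVAL_MINUTES] * (max_retries - len(intervals))
    return intervals


intervals = retry_intervals()
total_minutes = sum(intervals)
hours, minutes = divmod(total_minutes, 60)
print(f"{len(intervals)} retries over {hours} h {minutes} min")
```

Under these assumptions, the sum of the waits lands within about a minute of the approximate total quoted above.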

To configure a different maximum number of retry attempts for reflection refreshes than Dremio's default of 24 retries:

- Click the icon in the left navbar.

- Select Reflections in the left sidebar.

- On the Reflections page, click the icon in the top-right corner and select Acceleration Settings.

- In the field next to Maximum attempts for reflection job failures, specify the maximum number of retries.

- Click Save. The change goes into effect immediately.

Dremio applies the retry policy after a refresh failure for all types of reflection refreshes, no matter whether the refresh was triggered manually in the Dremio console or by an API request, an SQL command, or a refresh policy.

Querying Which Reflections Will Be Refreshed

To list the reflections that will also be refreshed if a refresh is triggered for a particular reflection, query the sys.reflection_lineage('<reflection_id>') table function. For more information about which reflections are listed, see Triggering Refreshes by Using the Reflection API, the Catalog API, or an SQL Command.

SELECT *

FROM TABLE(sys.reflection_lineage('<reflection_id>'))

<reflection_id> String

The ID of the reflection. If you need to find a reflection ID, see Obtaining Reflection IDs.

Example Query

SELECT * FROM TABLE(sys.reflection_lineage('ebb8350d-9906-4345-ba19-7ad1da2a3cab'));

| batch_number | reflection_id | reflection_name | dataset_name |
|---|---|---|---|
| 0 | 4a33df7c-be91-4c78-ba20-9cafc98a4a1d | R_P3_web_sales | "@dremio"."P3_web_sales" |
| 1 | 82c32002-5040-4c2a-9430-36320b47b038 | R_V6 | s3."store_sales"."V6" |
| 2 | 2f039cd7-8bf7-468e-8d76-d9bf949d94db | R_V11 | "@dremio"."V11" |
| 2 | 3e9b03b8-6964-45b6-bab5-534088cfcff1 | R_V10 | hive."catalog_sales"."V10" |

The records returned consist of these fields:

| Column | Data Type | Description |

|---|---|---|

| batch_number | integer | The depth of the listed reflection from its upstream base tables, as indicated in the Dependency Graph. If a reflection depends on other reflections, it has a larger batch number and is refreshed later than the reflections on which it depends. Reflections with the same batch number do not depend on each other, and their refreshes run in parallel. |

| reflection_id | varchar | The ID of the reflection that will also be refreshed if the reflection in the query is refreshed. |

| reflection_name | varchar | The name of the reflection that will also be refreshed if the reflection in the query is refreshed. |

| dataset_name | varchar | The path and name of the dataset that the reflection is on. |
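To illustrate how batch_number orders the refreshes, the sketch below groups the rows from the example query into waves: each wave holds reflections with the same batch number, and waves run in ascending order. The `refresh_waves` function is hypothetical, and the rows are abbreviated to the batch number and reflection name.

```python
from itertools import groupby

# (batch_number, reflection_name) pairs taken from the example query result.
lineage = [
    (0, "R_P3_web_sales"),
    (1, "R_V6"),
    (2, "R_V11"),
    (2, "R_V10"),
]


def refresh_waves(rows):
    """Group reflection names by batch number, in ascending batch order."""
    ordered = sorted(rows, key=lambda row: row[0])
    return [
        [name for _, name in group]
        for _, group in groupby(ordered, key=lambda row: row[0])
    ]


for batch, names in enumerate(refresh_waves(lineage)):
    print(batch, names)
```

Reflections in the same wave do not depend on each other, so in this model R_V11 and R_V10 could be refreshed at the same time, after R_V6.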

Triggering Refreshes by Using the Reflection API, the Catalog API, or an SQL Command

You can refresh reflections by using the Reflection API, the Catalog API, and the SQL commands ALTER TABLE and ALTER VIEW.

- With the Reflection API, you specify the ID of a reflection. See Refreshing a Reflection.

- With the Catalog API, you specify the ID of a table or view that the reflections are defined on. See Refreshing the Reflections on a Table and Refreshing the Reflections on a View.

- With the ALTER TABLE and ALTER VIEW commands, you specify the path and name of the table or view that the reflections are defined on.

The refresh action follows this logic for the Reflection API:

- If the reflection is defined on a view, the action refreshes all reflections that are defined on the tables and on downstream/dependent views that the anchor view is itself defined on.

- If the reflection is defined on a table, the action refreshes the reflections that are defined on the table and all reflections that are defined on the downstream/dependent views of the anchor table.

The refresh action follows similar logic for the Catalog API and the SQL commands:

- If the action is started on a view, it refreshes all reflections that are defined on the tables and on downstream/dependent views that the view is itself defined on.

- If the action is started on a table, it refreshes the reflections that are defined on the table and all reflections that are defined on the downstream/dependent views of the anchor table.

For example, suppose that you had the following tables and views, with reflections R1 through R5 defined on them:

                  View2(R5)
                 /         \
          View1(R3)      Table3(R4)
          /       \
    Table1(R1)  Table2(R2)

- Refreshing reflection R5 through the API also refreshes R1, R2, R3, and R4.

- Refreshing reflection R4 through the API also refreshes R5.

- Refreshing reflection R3 through the API also refreshes R1, R2, and R5.

- Refreshing reflection R2 through the API also refreshes R3 and R5.

- Refreshing reflection R1 through the API also refreshes R3 and R5.
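The five outcomes above are consistent with a simple rule: a refresh also reaches every reflection on a dataset that is upstream or downstream of the anchor dataset. The sketch below encodes the example graph and applies that rule. The rule is inferred from the example rather than taken from Dremio's implementation, and the dictionaries and function names are hypothetical.

```python
# Each dataset maps to the datasets it is directly defined on.
UPSTREAM = {
    "View2": ["View1", "Table3"],
    "View1": ["Table1", "Table2"],
    "Table1": [],
    "Table2": [],
    "Table3": [],
}
REFLECTION_ON = {"R1": "Table1", "R2": "Table2", "R3": "View1",
                 "R4": "Table3", "R5": "View2"}


def reachable(start, edges):
    """Return every dataset reachable from `start` by following `edges`."""
    seen, stack = set(), [start]
    while stack:
        for neighbor in edges.get(stack.pop(), []):
            if neighbor not in seen:
                seen.add(neighbor)
                stack.append(neighbor)
    return seen


def also_refreshed(reflection):
    """List the other reflections refreshed along with `reflection`."""
    anchor = REFLECTION_ON[reflection]
    # Invert the upstream edges to get the downstream direction.
    downstream = {}
    for child, parents in UPSTREAM.items():
        for parent in parents:
            downstream.setdefault(parent, []).append(child)
    datasets = reachable(anchor, UPSTREAM) | reachable(anchor, downstream)
    return sorted(r for r, d in REFLECTION_ON.items() if d in datasets)


for name in ["R5", "R4", "R3", "R2", "R1"]:
    print(name, "->", also_refreshed(name))
```

Note that siblings are not reached: refreshing R3 does not refresh R4, because Table3 is neither upstream nor downstream of View1.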

Refreshing reflections with the Reflection API, the Catalog API on views, and the SQL commands ALTER TABLE and ALTER VIEW is supported by Enterprise Edition only.

Routing Refresh Jobs to Particular Queues

You can use an SQL command to route jobs for refreshing reflections directly to specified queues. See Reflections in the SQL reference.