Customizing Kubernetes Deployments

This documentation is for Dremio 24.3.x. For the latest deployment documentation, please see Deploy Dremio.

This topic provides information about customizing or modifying the default configuration for deploying Dremio in a Kubernetes environment.

Dremio Enterprise Edition

If you are deploying with Dremio Enterprise Edition, do the following:

- Contact Dremio to obtain read access to the Dremio EE image (a Docker Hub ID is required). The Dremio EE image is not public.

- Create a Dremio-specific Kubernetes secret. Follow the instructions in Specifying ImagePullSecrets on a Pod.

For example, to create the secret in Kubernetes, run the following command with your own credentials:

```shell
kubectl create secret docker-registry dremio-docker-secret \
  --docker-username=your_username \
  --docker-password=your_password_for_username \
  --docker-email=DOCKER_EMAIL
```

Pods can only reference image pull secrets in their own namespace, so create the secret in the namespace where Dremio is deployed (for example, by adding --namespace <namespace> to the kubectl command).

- Modify the following properties in the values.yaml file:

  - image: the Dremio EE image name.
  - imagePullSecrets: the Dremio-specific Kubernetes secret.
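The secret created in the second step has type kubernetes.io/dockerconfigjson. Its .dockerconfigjson key holds a small JSON document. The following Python sketch builds an equivalent document, which can help when checking which credentials Kubernetes will present to the registry.

- It assumes the Docker Hub server URL that kubectl uses by default (https://index.docker.io/v1/).
- The function name docker_config_json is hypothetical and is not part of Dremio or kubectl.

```python
import base64
import json


def docker_config_json(username: str, password: str, email: str = "",
                       server: str = "https://index.docker.io/v1/") -> str:
    """Build the JSON stored under the .dockerconfigjson key of a
    kubernetes.io/dockerconfigjson secret (hypothetical helper)."""
    # The "auth" field is base64("username:password").
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    entry = {"username": username, "password": password, "auth": auth}
    if email:
        entry["email"] = email
    return json.dumps({"auths": {server: entry}})


if __name__ == "__main__":
    print(docker_config_json("your_username", "your_password_for_username",
                             "DOCKER_EMAIL"))
```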

Cluster Configuration

Dremio provides a default cluster configuration that is deployed by the provided Helm charts. To change the cluster configuration, modify the properties in the values.yaml file.

In the default Dremio cluster configuration shown in the following table, each main coordinator, secondary-coordinator, and executor runs as its own pod.

| Pod Type | Pod Count | Memory/CPU | Persistent Volume Size/Usage | Description |
|---|---|---|---|---|
| main coordinator | 1 | 16GB, 8 cores | 100Gi: persistent volume with metadata. | The main coordinator pod manages the metadata. |
| Dremio admin | 1 | n/a | 0: no persistent volume. | The admin pod runs offline Dremio admin commands. To do so, it uses the main coordinator's persistent volume and metadata. Typically, this pod is not running. |
| secondary-coordinator | 0 | 16GB, 8 cores | 0: no persistent volume. | The secondary-coordinator pod handles query planning, Dremio UI/REST requests, and ODBC/JDBC client requests. |
| executor | 3 | 16GB, 4 cores | 100Gi: persistent volume with Reflections, spilling, results, and upload data. | The executor pod is responsible for query execution. |
| zookeeper | 3 | 1024MB, 0.5 cores | 10Gi | The zookeeper pod manages coordination between the Dremio pods. |
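The table can be turned into a rough estimate of the resources the default configuration requests. The following Python sketch multiplies each row's pod count by its memory and CPU, then sums the results. It makes these simplifications:

- It treats the GB figures loosely.
- It ignores Kubernetes system overhead.
- It skips the admin pod, which normally is not running.
- It assumes 1024MB (1 GB) of memory per Zookeeper pod.

The PodSpec and total_requests names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PodSpec:
    name: str
    count: int
    memory_gb: float
    cpu: float


# Default pod sizes from the table above; the admin pod is excluded
# because it normally is not running.
DEFAULT_CLUSTER = [
    PodSpec("main coordinator", 1, 16, 8),
    PodSpec("secondary-coordinator", 0, 16, 8),
    PodSpec("executor", 3, 16, 4),
    PodSpec("zookeeper", 3, 1, 0.5),  # assumes 1024MB (1 GB) per pod
]


def total_requests(pods):
    """Return (total memory in GB, total CPU cores) across all pods."""
    memory = sum(p.count * p.memory_gb for p in pods)
    cpu = sum(p.count * p.cpu for p in pods)
    return memory, cpu


if __name__ == "__main__":
    mem, cpu = total_requests(DEFAULT_CLUSTER)
    print(f"{mem:g} GB memory, {cpu:g} CPU cores")
```

With the defaults shown, this prints 67 GB memory, 21.5 CPU cores.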

Load Balancer

Load balancing distributes the workload from Dremio's web (UI and REST) clients and ODBC/JDBC clients. All web and ODBC/JDBC clients connect to a single endpoint (the load balancer) rather than directly to an individual pod, and these connections are then distributed across the available coordinator (main coordinator and secondary-coordinator) pods.

Recommendation: Load balancing is recommended when more than one coordinator pod (main coordinator and secondary-coordinator) is deployed.

If your load balancer supports session affinity, it is recommended that you enable the sessionAffinity property.

Specifically, if you plan to configure two or more (2+) coordinator pods (main and secondary-coordinator), you must enable the LoadBalancer service and the session affinity property.

See the values.yaml section for more information.

The following table shows which configurations require a load balancer configured and sessionAffinity enabled:

| Main Coordinator Pods | Secondary-Coordinator Pods | Load Balancer and Session Affinity Required |
|---|---|---|
| 1 | 0 | No |
| 1 | 1+ | Yes |
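The rule in this table reduces to a single check on the number of secondary-coordinator pods. The following is a minimal Python sketch of that check. The function name requires_session_affinity is hypothetical, and the sketch only covers the single-main-coordinator configurations the table lists.

```python
def requires_session_affinity(main_coordinators: int,
                              secondary_coordinators: int) -> bool:
    """Encode the table above: a load balancer with session affinity is
    required once one or more secondary-coordinator pods are deployed."""
    if main_coordinators != 1:
        raise ValueError("the table only covers one main coordinator")
    if secondary_coordinators < 0:
        raise ValueError("secondary-coordinator count cannot be negative")
    return secondary_coordinators >= 1
```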

values.yaml

The values.yaml file is used to set the Dremio image, Dremio pods, and load balancing properties.

| Property | Description | Default |
|---|---|---|
| image | Specifies the Dremio image. If you use the Dremio Enterprise Edition image, you must also provide the Kubernetes secret for Dremio with the imagePullSecrets property. | dremio/dremio-oss (Community Edition) |
| coordinator | Specifies the configuration (memory, CPU, count, and volume size) for coordinator pods. | See the values.yaml file for current values. |
| executor | Specifies the configuration (memory, CPU, count, and volume size) for Dremio's executor pods. | See the values.yaml file for current values. |
| zookeeper | Specifies the configuration (memory, CPU, count, and volume size) for Dremio's Zookeeper pods. | See the values.yaml file for current values. |
| serviceType | Specifies the Kubernetes service type used for load balancing. | LoadBalancer |
| sessionAffinity | Specifies the load balancer session affinity. If you configure one or more (1+) secondary-coordinators, this property must be enabled. | ClientIP |
| storageClass | Specifies a custom storage class. To use a value other than the default, uncomment this property and provide the custom storage class. Currently, the only custom value available is managed-premium for Azure AKS. | The default persistent storage supported by the Kubernetes platform. |
| imagePullSecrets | Specifies the credentials Kubernetes needs to pull Dremio Enterprise Edition images. Use this property together with the image property. | n/a |
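Some of the rules in this table can be expressed as a small lint for a parsed values.yaml. The following Python sketch checks two of them:

- A non-default (Enterprise Edition) image needs imagePullSecrets.
- A custom storageClass is limited to managed-premium.

It assumes these properties are top-level keys, as listed in the table; their nesting may differ between chart versions. The check_values function and the example/dremio-ee image name are hypothetical.

```python
COMMUNITY_IMAGE = "dremio/dremio-oss"
# Per the table above, managed-premium (Azure AKS) is the only custom value.
ALLOWED_CUSTOM_STORAGE_CLASSES = {"managed-premium"}


def check_values(values: dict) -> list:
    """Return human-readable problems found in a parsed values.yaml mapping."""
    problems = []
    image = values.get("image", COMMUNITY_IMAGE)
    # The EE image is private, so Kubernetes needs pull credentials for it.
    if image != COMMUNITY_IMAGE and not values.get("imagePullSecrets"):
        problems.append(f"image {image} is not {COMMUNITY_IMAGE}; set imagePullSecrets")
    # An absent storageClass means the platform default is used.
    storage_class = values.get("storageClass")
    if storage_class is not None and storage_class not in ALLOWED_CUSTOM_STORAGE_CLASSES:
        problems.append(f"unsupported custom storageClass: {storage_class}")
    return problems


if __name__ == "__main__":
    # example/dremio-ee is a hypothetical image name.
    print(check_values({"image": "example/dremio-ee"}))
```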

Config Directory

The files in the config directory are used for the following:

- Dremio configuration and environment properties

- Dremio logging

- Deployment-specific files

Each file in the config directory provides defaults that work out of the box. If you want to customize Dremio, do the following:

- Review and modify the files.

- Add deployment-specific files (for example, core-site.xml) by copying your file(s) to this directory.

Any customizations to your Dremio environment are propagated to all the pods when installing or upgrading the deployment.

| File | Description |
|---|---|
| dremio.conf | Used to specify various options related to node roles, metadata storage, distributed cache storage, and more. See Configuration Overview if you want to customize your Dremio environment. |
| dremio-env | Used for setting Java options and log directories. See Configuration Overview if you want to customize your Dremio environment. |
| logback-access.xml | Used to control access logging. |
| logback.xml | Used to control the log levels. |

Custom Authentication

By default, the Helm charts enable local user authentication. Dremio Enterprise supports multiple authentication methods, as described in the Dremio authentication documentation.

To configure AD/LDAP, follow these steps:

- Follow the AD/LDAP setup instructions to prepare the ad.json file.

- Place the ad.json file in the config folder of the Helm charts.

- Add the following lines to the end of dremio.conf in the config folder:

```
services.coordinator.web.auth.type: "ldap"
services.coordinator.web.auth.config: "ad.json"
```

The services.coordinator.web.auth.config configuration property replaces services.coordinator.web.auth.ldap_config, which is deprecated.

- For the changes to take effect, run the helm upgrade command if Dremio is already running, or the helm install command if it is not.

To configure Azure Active Directory, follow these steps:

- Follow the AAD setup instructions to prepare the azuread.json file.

- Place the azuread.json file in the config folder of the Helm charts.

- Add the following lines to the end of dremio.conf in the config folder:

```
services.coordinator.web.auth.type: "azuread"
services.coordinator.web.auth.config: "azuread.json"
```

- For the changes to take effect, run the helm upgrade command if Dremio is already running, or the helm install command if it is not.

To configure SSO with OpenID, follow these steps:

- Follow the OpenID setup instructions to prepare the oauth.json file.

- Place the oauth.json file in the config folder of the Helm charts.

- Add the following lines to the end of dremio.conf in the config folder:

```
services.coordinator.web.auth.type: "oauth"
services.coordinator.web.auth.config: "oauth.json"
```

- For the changes to take effect, run the helm upgrade command if Dremio is already running, or the helm install command if it is not.
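The three procedures above differ only in the auth type and the JSON file name. The following Python sketch applies the dremio.conf change for any of them. It also removes any existing auth type/config lines and the deprecated services.coordinator.web.auth.ldap_config key, so that only one setting remains.

- It assumes these settings appear in dremio.conf as dotted keys on their own lines.
- The helper name set_auth is hypothetical.

```python
TYPE_KEY = "services.coordinator.web.auth.type"
CONFIG_KEY = "services.coordinator.web.auth.config"
DEPRECATED_KEY = "services.coordinator.web.auth.ldap_config"

# Auth type -> JSON file placed in the config folder of the Helm charts.
AUTH_CONFIG_FILES = {
    "ldap": "ad.json",
    "azuread": "azuread.json",
    "oauth": "oauth.json",
}


def set_auth(conf_text: str, auth_type: str) -> str:
    """Return dremio.conf text that ends with the auth lines for auth_type.

    Existing auth type/config lines (and the deprecated ldap_config key)
    are dropped first, so running the helper twice leaves one setting.
    """
    config_file = AUTH_CONFIG_FILES[auth_type]  # KeyError for unknown types
    old_keys = (TYPE_KEY, CONFIG_KEY, DEPRECATED_KEY)
    kept = [line for line in conf_text.splitlines()
            if not line.strip().startswith(old_keys)]
    kept.append(f'{TYPE_KEY}: "{auth_type}"')
    kept.append(f'{CONFIG_KEY}: "{config_file}"')
    return "\n".join(kept) + "\n"
```

For example, read config/dremio.conf, pass its text together with "ldap", and write the result back before running helm upgrade or helm install.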

Changing the memory and CPU cores

The default memory and CPU values for executors and coordinators in the Helm charts are 122800 MB (about 122GB) and 15 cores. You can change the CPU cores and memory allocated to the pods directly in the coordinator and executor sections of values.yaml:

```yaml
coordinator:
  # CPU & Memory
  # Memory allocated to each coordinator, expressed in MB.
  # CPU allocated to each coordinator, expressed in CPU cores.
  cpu: 15
  memory: 122800

executor:
  # CPU & Memory
  # Memory allocated to each executor, expressed in MB.
  # CPU allocated to each executor, expressed in CPU cores.
  cpu: 15
  memory: 122800
```

In some cases, Dremio support may recommend different values for heap and direct memory. To apply them, edit templates/dremio-master.yaml and templates/dremio-executor.yaml and change the following lines:

```yaml
- name: DREMIO_MAX_HEAP_MEMORY_SIZE_MB
  value: "{{ template "dremio.coordinator.heapMemory" $ }}"
- name: DREMIO_MAX_DIRECT_MEMORY_SIZE_MB
  value: "{{ template "dremio.coordinator.directMemory" $ }}"
```

Change them to the values recommended by Dremio support, for example:

```yaml
- name: DREMIO_MAX_HEAP_MEMORY_SIZE_MB
  value: "16000"
- name: DREMIO_MAX_DIRECT_MEMORY_SIZE_MB
  value: "120000"
```
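If you prefer to script this edit rather than make it by hand, the following Python sketch rewrites the value line that follows each variable name. It is a plain text substitution.

- It assumes each - name: line is immediately followed by its value: line, as in the snippets above.
- The helper name pin_memory_env is hypothetical.
- Run it on each template file you need to change.

```python
import re


def pin_memory_env(template_text: str, heap_mb: int, direct_mb: int) -> str:
    """Replace the templated values of the two memory variables with
    fixed MB values recommended by Dremio support (hypothetical helper)."""
    overrides = {
        "DREMIO_MAX_HEAP_MEMORY_SIZE_MB": heap_mb,
        "DREMIO_MAX_DIRECT_MEMORY_SIZE_MB": direct_mb,
    }
    for name, mb in overrides.items():
        # Group 1 keeps "- name: NAME", the newline, indentation and "value: ".
        # The quoted value may contain inner quotes, so match to the last
        # quote on the line.
        pattern = re.compile(
            r"(-[ \t]*name:[ \t]*" + re.escape(name)
            + r'[ \t]*\n[ \t]*value:[ \t]*)"[^\n]*"')
        template_text, count = pattern.subn(
            lambda m: f'{m.group(1)}"{mb}"', template_text)
        if count == 0:
            raise ValueError(f"{name} not found in template")
    return template_text
```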

Enabling TLS

Dremio Enterprise supports TLS encryption for the web UI and for client connections. This section shows how to enable it.

Obtain the private key and certificate in PEM format, and then enable TLS for each protocol as described in the following sections.
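Before you create any of the TLS secrets, it can help to confirm that privkey.pem and cert.pem really are PEM files of the expected kind. The following Python sketch checks only the PEM structure:

- The key file must contain a complete BEGIN/END block with a private key label.
- The certificate file must contain a CERTIFICATE block.

It does not verify that the key matches the certificate. The function names are hypothetical.

```python
import re

# A complete PEM block: BEGIN and END lines with the same label.
PEM_BLOCK = re.compile(r"-----BEGIN ([A-Z0-9 ]+)-----.+?-----END \1-----", re.S)


def pem_labels(text: str) -> list:
    """Return the labels of all complete PEM blocks, e.g. ["CERTIFICATE"]."""
    return [m.group(1) for m in PEM_BLOCK.finditer(text)]


def check_tls_files(key_text: str, cert_text: str) -> list:
    """Return problems found in the contents of the key and cert files."""
    problems = []
    if not any(label.endswith("PRIVATE KEY") for label in pem_labels(key_text)):
        problems.append("key file has no PEM private key block")
    if "CERTIFICATE" not in pem_labels(cert_text):
        problems.append("cert file has no PEM certificate block")
    return problems
```

Pass the contents of privkey.pem and cert.pem. An empty list means both files passed these structural checks.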

For Web UI

Run the following command to create the secret:

```shell
kubectl create secret tls dremio-tls-secret-ui --key privkey.pem --cert cert.pem
```

Then, in the following section of values.yaml, change enabled: false to enabled: true. The section should look like this:

```yaml
# Web UI
web:
  port: 9047
  tls:
    # To enable TLS for the web UI, set the enabled flag to true and provide
    # the appropriate Kubernetes TLS secret.
    enabled: true

    # To create a TLS secret, use the following command:
    # kubectl create secret tls ${TLS_SECRET_NAME} --key ${KEY_FILE} --cert ${CERT_FILE}
    secret: dremio-tls-secret-ui
```

For ODBC/JDBC client connectivity (legacy)

Run the following command to create the secret:

```shell
kubectl create secret tls dremio-tls-secret-client --key privkey.pem --cert cert.pem
```

Then, in the following section of values.yaml, change enabled: false to enabled: true. The section should look like this:

```yaml
# ODBC/JDBC Client
client:
  port: 31010
  tls:
    # To enable TLS for the client endpoints, set the enabled flag to
    # true and provide the appropriate Kubernetes TLS secret. Client
    # endpoint encryption is available only on Dremio Enterprise
    # Edition and should not be enabled otherwise.
    enabled: true

    # To create a TLS secret, use the following command:
    # kubectl create secret tls ${TLS_SECRET_NAME} --key ${KEY_FILE} --cert ${CERT_FILE}
    secret: dremio-tls-secret-client
```

For ODBC/JDBC and Arrow Flight client connectivity

Run the following command to create the secret:

```shell
kubectl create secret tls dremio-tls-secret-flight --key privkey.pem --cert cert.pem
```

Then, in the following section of values.yaml, change enabled: false to enabled: true. The section should look like this:

```yaml
# Flight Client
flight:
  port: 32010
  tls:
    # To enable TLS for the Flight endpoints, set the enabled flag to
    # true and provide the appropriate Kubernetes TLS secret.
    enabled: true

    # To create a TLS secret, use the following command:
    # kubectl create secret tls ${TLS_SECRET_NAME} --key ${KEY_FILE} --cert ${CERT_FILE}
    secret: dremio-tls-secret-flight
```

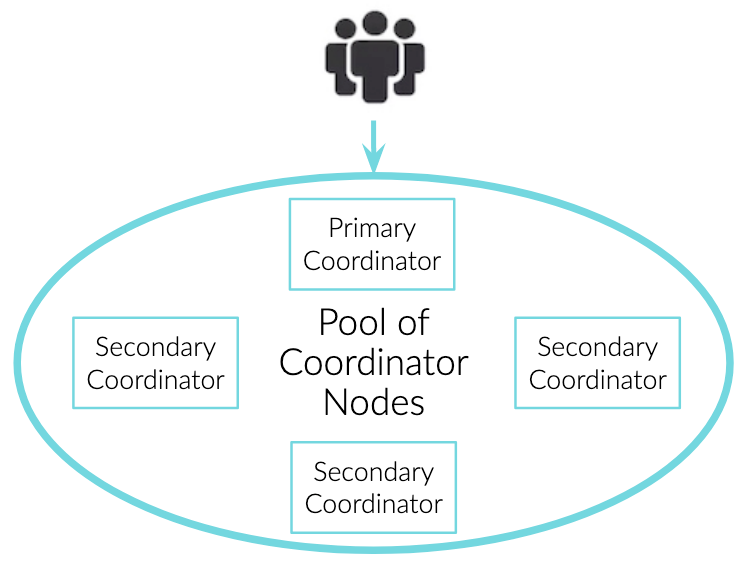

Scale-out coordinators

Dremio supports scaling out coordinators for high-concurrency use cases. Scale-out coordinators let you deploy multiple coordinator nodes to support more users and to increase the number of queries that a single Dremio system can process. With multiple coordinator nodes, Dremio can support thousands of concurrent users.

By default, the Helm charts deploy one main coordinator. To add coordinators during deployment, set count in the coordinator section of values.yaml to a number greater than 0. This value is the number of secondary-coordinators deployed in addition to the main coordinator. To scale the coordinators after deployment, run the following kubectl command:

```shell
kubectl scale statefulsets dremio-coordinator --replicas=<number>
```

When multiple coordinators are deployed, the load balancer service automatically routes incoming queries to an available coordinator in a round-robin manner.

Scaling executors and configuring engines

Specify the number of executors you need by changing the count in the following section of values.yaml:

```yaml
# Engines
# Engine names must be 47 characters or less and contain only lowercase alphanumeric characters or '-'.
# Note: The number of executor pods will be the length of the array below * count.
engines: ["default"]
count: 3
```
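The note in this snippet implies a simple calculation: the number of executor pods equals the length of engines multiplied by count. The following Python sketch performs that calculation. It first checks each name against the rule stated in the comment: 47 characters or less, using only lowercase alphanumeric characters or '-'. The function name executor_pod_count is hypothetical.

```python
import re

# Naming rule from the values.yaml comment: at most 47 characters,
# lowercase alphanumeric characters or '-'.
ENGINE_NAME = re.compile(r"[a-z0-9-]{1,47}")


def executor_pod_count(engines: list, count: int) -> int:
    """Validate engine names, then return len(engines) * count."""
    for name in engines:
        if not ENGINE_NAME.fullmatch(name):
            raise ValueError(f"invalid engine name: {name!r}")
    return len(engines) * count
```

For example, engines ["datascience","adhoc"] with count 3 gives 6 executor pods.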

You can also add multiple engines for different use cases and use Dremio's workload management to isolate workloads. This feature is available in Dremio Enterprise Edition only.

```yaml
# Engines
# Engine names must be 47 characters or less and contain only lowercase alphanumeric characters or '-'.
# Note: The number of executor pods will be the length of the array below * count.
engines: ["datascience","adhoc"]
count: 3
```

In the previous example, 3 executors are created for each engine name in the engines array. You can also specify a different number of executors for each engine by uncommenting the engineOverride lines in values.yaml and creating a copy of the block for each engine. For example, to run 5 executors for the datascience engine:

```yaml
engineOverride:
  datascience:
    cpu: 15
    memory: 122800

    count: 5

    annotations: {}
    podAnnotations: {}
    labels: {}
    podLabels: {}
    nodeSelector: {}
    tolerations: []

    serviceAccount: ""

    extraStartParams: >-
      -DsomeCustomKey=someCustomValue

    extraInitContainers: |
      - name: extra-init-container
        image: {{ $.Values.image }}:{{ $.Values.imageTag }}
        command: ["echo", "Hello World"]

    extraVolumes: []
    extraVolumeMounts: []

    volumeSize: 50Gi
    storageClass: managed-premium
    volumeClaimName: dremio-default-executor-volume

    cloudCache:
      enabled: true

      storageClass: ""

      volumes:
        - name: "default-c3"
          size: 100Gi
          storageClass: ""
```
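The effect of engineOverride on pod counts can be sketched in Python. The sketch assumes that an override's count replaces the top-level count for that engine only, and that engines without an override keep the top-level count. The function name executors_per_engine is hypothetical.

```python
def executors_per_engine(engines, count, engine_override=None):
    """Map each engine name to its executor count, applying any
    per-engine count from engine_override (a parsed engineOverride)."""
    overrides = engine_override or {}
    return {name: overrides.get(name, {}).get("count", count) for name in engines}


if __name__ == "__main__":
    counts = executors_per_engine(["datascience", "adhoc"], 3,
                                  {"datascience": {"count": 5}})
    print(counts, "total:", sum(counts.values()))
```

Under these assumptions, the example above yields 5 datascience executors and 3 adhoc executors, for 8 executor pods in total.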

It's also possible to place each StatefulSet (coordinators, executors) on a different node pool (AKS node pools, EKS node groups). To do so, uncomment the following line:

```yaml
#nodeSelector: {}
```

Then specify the node pool name:

```yaml
nodeSelector:
  agentpool: executorpool
```

It's a best practice to run Dremio pods in a user node pool. System node pools primarily host critical system pods such as CoreDNS and metrics-server, while user node pools primarily host your application pods.
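The scheduling rule behind nodeSelector is simple: a pod can only be placed on a node whose labels contain every key/value pair in the selector. The following Python sketch expresses that matching rule. It ignores taints, tolerations, and node affinity, which also influence scheduling. The function name node_matches is hypothetical.

```python
def node_matches(node_selector: dict, node_labels: dict) -> bool:
    """True if every key/value pair in node_selector appears in node_labels."""
    return all(node_labels.get(key) == value
               for key, value in node_selector.items())
```

With nodeSelector set to agentpool: executorpool, executor pods can only land on nodes labeled agentpool=executorpool. An empty selector matches every node.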

Storage

Dremio stores different kinds of data for various purposes. The following table gives an overview:

| Component | Location | What's stored? |
|---|---|---|
| Dremio metadata | Dremio main coordinator PVC (default: 128GB) | Information about spaces, view definitions, source configurations, and local users |
| Table metadata | Distributed storage: S3/Azure Storage/GCS | Apache Iceberg metadata about tables |
| Reflections | Distributed storage: S3/Azure Storage/GCS | Materializations of tables and views, stored as Iceberg tables |
| Logs | Default: STDOUT | Logs are sent to STDOUT; they can be configured to be stored in a PVC |
| Columnar cache | PVC attached to executors (default: 100GB) | Temporary data stored when reading Parquet files from the lake |
| Spill | PVC attached to executors (default: 128GB) | Temporary data stored when operators run out of memory |

Persisting logs to PVC

Logs are piped to STDOUT in the default configuration. To persist logs to the local PVC of the coordinators and executors, add the following lines to config/dremio-env:

```shell
DREMIO_LOG_TO_CONSOLE=0
DREMIO_LOG_DIR="/opt/dremio/data"
```

Then upgrade the Helm release. The logs are saved to /opt/dremio/data. To access them, open a shell in the pod (for example, with kubectl exec).
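The dremio-env change can also be scripted. The following Python sketch sets KEY=value lines in dremio-env text:

- An existing line for a key, including a commented-out #KEY=... line, is replaced in place.
- Keys that are missing are appended.

It assumes one KEY=value assignment per line. The helper name set_env_vars is hypothetical.

```python
def set_env_vars(env_text: str, settings: dict) -> str:
    """Return dremio-env text with each KEY in settings set to its value."""
    lines = env_text.splitlines()
    remaining = dict(settings)
    for i, line in enumerate(lines):
        # Treat "#KEY=value" the same as "KEY=value" so defaults get uncommented.
        stripped = line.lstrip("#").strip()
        key = stripped.split("=", 1)[0].strip()
        if "=" in stripped and key in remaining:
            lines[i] = f"{key}={remaining.pop(key)}"
    # Append any settings that had no existing line.
    lines.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(set_env_vars("#DREMIO_LOG_DIR=/var/log/dremio\n", {
        "DREMIO_LOG_TO_CONSOLE": "0",
        "DREMIO_LOG_DIR": '"/opt/dremio/data"',
    }))
```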

Sizing the PVCs

Columnar cache

If the query profile shows many cache misses, increase the size of the columnar cache PVC. To check, open a job, click the raw profile, and then click the operator called "TABLE FUNCTION". The "Operator Metrics" section shows the cache hits and cache misses columns. To resize the columnar cache PVC (default: 100Gi), edit the following lines in values.yaml:

```yaml
cloudCache:
  enabled: true

  # Uncomment this value to use a different storage class for C3.
  #storageClass:

  # Volumes to use for C3, specify multiple volumes if there are more than one local
  # NVMe disk that you would like to use for C3.
  #
  # The below example shows all valid options that can be provided for a volume.
  # volumes:
  # - name: "dremio-default-c3"
  #   size: 100Gi
  #   storageClass: "local-nvme"
  volumes:
    - size: 250Gi
```
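To compare configurations, the cache hits and cache misses columns can be reduced to a single hit ratio. The following Python sketch sums the values from each row of the TABLE FUNCTION operator metrics and returns hits / (hits + misses). It assumes one row per thread. The function name cache_hit_ratio is hypothetical. What counts as "a lot of cache misses" remains a judgment call for your workload.

```python
def cache_hit_ratio(rows):
    """Return hits / (hits + misses) summed over (cache_hits, cache_misses) rows."""
    rows = list(rows)
    hits = sum(h for h, _ in rows)
    misses = sum(m for _, m in rows)
    total = hits + misses
    if total == 0:
        raise ValueError("no cache reads recorded")
    return hits / total
```

For example, two threads reporting (90, 10) and (60, 40) give 150 / 200 = 0.75.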

Spill

When running a query, if there is not enough memory to complete an SQL operation (such as an aggregation, sort, or join), Dremio can spill the operation's data to disk to complete the query. For complex queries that require more memory, the spill disk can run out of space. When this happens, increase the spill disk size. This is the size of the PVC configured for executors in the following section of values.yaml (default: 128Gi):

```yaml
executor:
  # CPU & Memory
  # Memory allocated to each executor, expressed in MB.
  # CPU allocated to each executor, expressed in CPU cores.
  cpu: 15
  memory: 122800

  # Engines
  # Engine names must be 47 characters or less and contain only lowercase alphanumeric characters or '-'.
  # Note: The number of executor pods will be the length of the array below * count.
  engines: ["default"]
  count: 3

  # Executor volume size.
  volumeSize: 250Gi
```

Larger disks also improve performance, because the IOPS of many Azure, AWS, and GCP disk types scale with disk size.
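As one concrete example of how disk size drives IOPS, AWS EBS gp2 volumes provide a baseline of 3 IOPS per GiB, with a minimum of 100 and a maximum of 16,000 IOPS. The following Python sketch applies that rule and assumes the executor PVCs are backed by gp2 volumes. Other volume types behave differently: gp3 IOPS are provisioned independently of size, and Azure and GCP disk types use their own sizing rules. The function name gp2_baseline_iops is hypothetical.

```python
def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS of an AWS EBS gp2 volume: 3 IOPS per GiB,
    with a floor of 100 and a ceiling of 16,000."""
    return max(100, min(16_000, 3 * size_gib))
```

Under this assumption, raising the spill volume from 128Gi to 250Gi raises its baseline from 384 to 750 IOPS.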