Amazon EKS

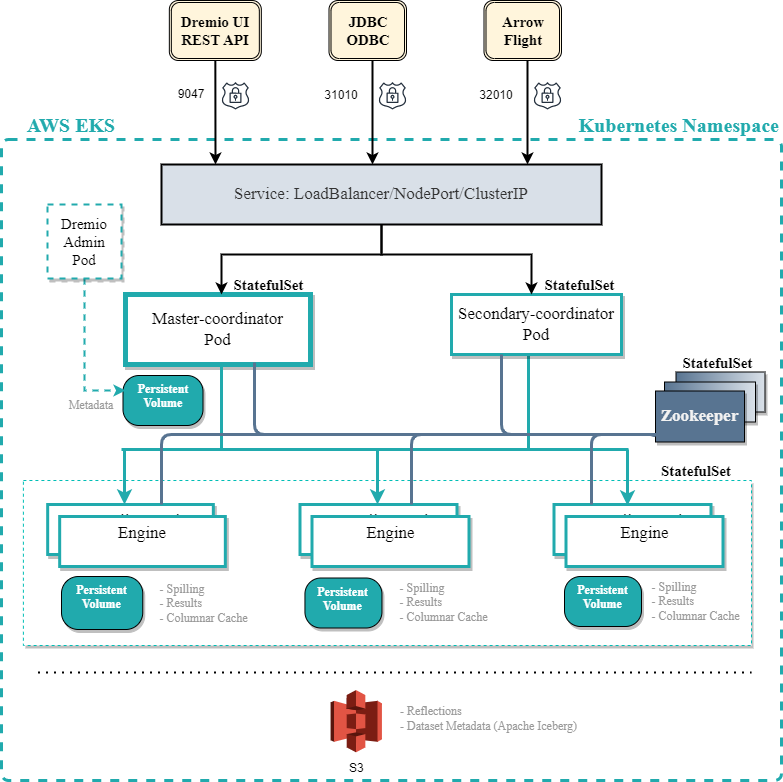

This topic describes the deployment architecture of Dremio on Amazon Elastic Kubernetes Service (EKS).

Architecture

Requirements

Before setting up an EKS cluster, make sure you meet these requirements:

- Use AWS EKS version 1.12.7 or later.

- Use r5d.4xlarge (16 cores, 128 GiB memory, 2 x 300 GB NVMe) as the minimum worker node instance type.

Read the following subsections for further requirements.

Kubernetes

Dremio requires regular updates to your Kubernetes version in EKS to stay on an officially supported version (see Understand the Kubernetes version lifecycle on EKS for more information). Falling behind on your Kubernetes version can increase the total cost of ownership, as AWS charges extra for clusters that run a version outside standard support.

Make sure you meet these requirements:

- The version of Kubernetes must be one from the list under the section Available versions on standard support.

- Cgroups v2 must be enabled. You can check this by running the command `stat -fc %T /sys/fs/cgroup/` on a Dremio pod, which should return `cgroup2fs`. If cgroups v2 is not enabled, Dremio may restart unexpectedly.
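When many pods need to be verified, the cgroups check can be scripted. The following is a minimal sketch in Python, assuming the output of the `stat` command has already been captured as text (for example, through `kubectl exec`). The function name `is_cgroup_v2` is hypothetical and not part of any Dremio tooling.

```python
def is_cgroup_v2(stat_output: str) -> bool:
    """Return True if `stat -fc %T /sys/fs/cgroup/` reported cgroups v2.

    The command prints the filesystem type of the cgroup mount point.
    `cgroup2fs` indicates cgroups v2; any other value (commonly `tmpfs`
    on cgroups v1 hosts) is treated as "not v2".
    """
    return stat_output.strip() == "cgroup2fs"


if __name__ == "__main__":
    # Sample outputs, hard-coded for illustration only.
    for sample in ("cgroup2fs\n", "tmpfs\n"):
        state = "enabled" if is_cgroup_v2(sample) else "NOT enabled"
        print(f"{sample.strip()}: cgroups v2 {state}")
```

Comparing the trimmed output against the exact string keeps the check strict, so an empty or unexpected result is reported as a failure rather than silently passing.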

Helm Charts

Dremio requires regular updates to the latest version of the official Dremio Helm charts. Dremio does not support the use of Helm charts published by third parties.

Dremio highly recommends checking the Dremio Helm chart configuration (typically the values.yaml file), along with the rest of the Helm chart, into a code repository (e.g., Git).

Dremio Docker Image

Dremio requires using the official Dremio Docker image. Any variation must be approved by Dremio directly before use.

Dremio does not support loading and running other applications inside the Dremio image. Other applications must run in their own containers, as they may interfere with the behavior of the Dremio application.

Container Registry

Dremio recommends pushing any required Docker images (Dremio Enterprise Edition, ZooKeeper, Nessie) to a private container registry, e.g., Amazon Elastic Container Registry, because Dremio does not provide any SLAs for its quay.io and docker.io repositories.

EKS Cluster Configuration

Before setting up an EKS cluster, make sure you have read and understood the configuration recommendations described in these sections:

- Add-Ons

- Node Sizes

- Disk Storage Class

- Distributed Storage

- Network

- Load Balancer

- Monitoring

- Logging

- Backup

Add-Ons

The following add-ons are required for EKS Clusters:

- Amazon VPC CNI

- Amazon EBS CSI Driver

- Amazon EKS Pod Identity Agent

- CoreDNS

- Kube-proxy

Node Sizes

Regarding node sizes, the amount of CPU and memory allocated to Dremio will always be less than the EKS node size, as Kubernetes, the system pods, and the OS will also require resources. For this reason, we subtract 2 from the total available CPUs and subtract 10-20 GB of the theoretical node memory when allocating resources to the Dremio pods.
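The reservation rule above is simple arithmetic, and a short sketch makes it easy to apply to other instance types. This Python example is illustrative only: the names `PodResources` and `pod_allocation` are hypothetical, and the default memory reservation of 18 GB is an assumption chosen from within the 10-20 GB range so that the result matches the executor figures given later in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodResources:
    cpus: int
    memory_gb: int


def pod_allocation(node_cpus: int, node_memory_gb: int,
                   reserved_cpus: int = 2,
                   reserved_memory_gb: int = 18) -> PodResources:
    """Subtract what Kubernetes, the system pods, and the OS need.

    reserved_memory_gb defaults to 18 (an assumed value within the
    10-20 GB range); adjust it for your environment.
    """
    if node_cpus <= reserved_cpus or node_memory_gb <= reserved_memory_gb:
        raise ValueError("node is too small for the requested reservation")
    return PodResources(node_cpus - reserved_cpus,
                        node_memory_gb - reserved_memory_gb)


if __name__ == "__main__":
    # A 16-CPU, 128 GB node such as r5d.4xlarge.
    print(pod_allocation(16, 128))
```

For a 16-CPU, 128 GB node this yields 14 CPUs and 110 GB for the Dremio pod. Passing `reserved_memory_gb=6` for a 32-CPU, 64 GB node reproduces the 30 CPUs and 58 GB quoted for coordinators, which shows that the memory reservation is a tunable rather than a fixed constant.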

Learn more about the instance types in Amazon EC2 M5 Instances.

Dremio recommends having separate EKS node groups for coordinators and executors to allow each node group to autoscale independently:

- Coordinator

  For coordinators, Dremio recommends at least 32 CPUs and 64 GB of memory (e.g., a c6i.8xlarge instance is a good option). In the Helm charts, this amount would result in 30 CPUs and 58 GB of memory allocated to the Dremio pod.

  For further information on recommended JVM Garbage Collection (GC) parameters, see G1GC settings for the Dremio JVM.

  If the workload increases beyond the limits of a single coordinator, Dremio requires adding scale-out coordinators. For more information on when and how to scale coordinators, read this PDF on Evaluating Coordinator Scaling.

  To facilitate resource scaling, Dremio recommends setting the coordinator node group in EKS to autoscale.

- Executor

  For executors, Dremio recommends using 16 CPUs and 128 GB of memory (e.g., an r5d.4xlarge instance is a good option). In the Helm charts, this amount results in 14 CPUs and 110 GB of memory allocated to the Dremio pod.

  The minimum node group size should be set to 1, and the maximum should be the number of nodes defined in the contract. Nodes per engine can be set in the values.yaml file.

- ZooKeeper

  For ZooKeeper pods, Dremio recommends 3 small nodes (e.g., m5d.xlarge nodes with 2 cores and 8 GB of memory). These pods only require 1 CPU and 2 GB of memory, and they are configured to run on separate nodes for resiliency against individual node failures.

Disk Storage Class

Dremio recommends fast local NVMe (or SSD) storage for C3 and for spilling on executors in EKS cluster deployments. The storage type should be GP3 or IO2 for all nodes. Learn more about disk storage class in Create a Storage Class.

Storage size requirements are:

- Coordinator volume: 512 GB (for KV store) of type GP3 or IO2.

- Executor volume #1 (results, spilling, and C3): minimum of 300 GB.

- Executor volume #2 (Columnar Cloud Cache): minimum of 300 GB.

- ZooKeeper volume: 16 GB.
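To estimate how much persistent storage a cluster needs in total, the minimums listed above can be added up. The sketch below is a rough planning aid in Python, not an official sizing tool. It assumes a single coordinator volume and three ZooKeeper nodes (the count recommended under Node Sizes), and the function name `minimum_storage_gb` is hypothetical.

```python
COORDINATOR_VOLUME_GB = 512   # KV store volume
EXECUTOR_VOLUME_GB = 300      # minimum size of each executor volume
EXECUTOR_VOLUMES = 2          # executor volume #1 and volume #2
ZOOKEEPER_VOLUME_GB = 16


def minimum_storage_gb(executors: int, zookeeper_nodes: int = 3) -> int:
    """Sum the minimum volume sizes for one coordinator, N executors,
    and the ZooKeeper nodes (assumed to be 3 unless stated otherwise)."""
    if executors < 1:
        raise ValueError("at least one executor is required")
    if zookeeper_nodes < 0:
        raise ValueError("zookeeper_nodes must not be negative")
    executor_total = executors * EXECUTOR_VOLUMES * EXECUTOR_VOLUME_GB
    zookeeper_total = zookeeper_nodes * ZOOKEEPER_VOLUME_GB
    return COORDINATOR_VOLUME_GB + executor_total + zookeeper_total


if __name__ == "__main__":
    print(minimum_storage_gb(4))  # 512 + 4 * 600 + 3 * 16 = 2960
```

Because the executor sizes are minimums, the result is a lower bound; actual volumes may need to be larger depending on the workload.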

Dremio explicitly recommends against using EFS storage (NFS or otherwise) for the coordinator volume, as I/O performance has been shown to be insufficient for Dremio’s KV store when the disk is not local. If cross-availability zone storage is required, then EBS storage should be configured for multiple availability zones. In that case, you are required to do performance testing.

For executors, if the underlying node has at least 300 GB of local SSD/NVMe storage included (r5d.4xlarge has 2 NVMe disks of 300 GB each and m5d.8xlarge has 2 NVMe disks of 600 GB each), we recommend using these disks as “local storage” for better performance for C3 and spilling. This storage requires an additional Helm chart and EKS configuration.

Distributed Storage

Dremio requires Amazon S3 Storage to be configured as distributed storage on EKS. To learn more about distributed storage, please refer to Configuring Distributed Storage.

Network

The network bandwidth must be 10 Gbps or higher.

Load Balancer

Load balancing distributes the workload from Dremio's web client (UI and REST) and ODBC/JDBC clients. All web and ODBC/JDBC clients connect to a single endpoint (load balancer) rather than directly to an individual pod. These connections are then distributed across available coordinator (main coordinator and secondary-coordinator) pods.

Although the provided Helm charts include a basic load balancer server configuration, it is strongly recommended to operate the load balancer independently (e.g., via Nginx Helm charts or a load balancer provided by the AWS platform).

Monitoring

Dremio highly recommends setting up (or connecting to an existing) monitoring stack, e.g., Prometheus and Grafana. Monitoring the cluster’s resource usage over time, such as heap and direct memory, CPU, and disk I/O, is a key pillar of Dremio’s shared responsibility model. Monitoring ensures long-term stability as usage of the system grows. Additionally, monitoring is required for Horizontal Pod Autoscaling.

For a detailed setup tutorial and an overview of which metrics to track, see Dremio Monitoring in Kubernetes.

Logging

Dremio highly recommends setting writeLogsToFile to true in the Helm charts for both coordinators and executors, so Dremio writes logs to a file and Dremio cluster admins can easily download log packages from the Dremio console (see Retrieving Logs from the Dremio Console).

Backup

Dremio highly recommends scheduling regular backups of Dremio’s KV Store either fully automated (version 25.1+) or as a cronjob. Dremio also recommends regularly testing the internal backup restore process by performing a Dremio cluster restore every 6 to 12 months.

The Dremio Support team has observed cases where backups were scheduled regularly but were not stored properly, so that when disaster struck it was impossible to restore the environment within the SLAs defined by users.
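One way to catch backups that are scheduled but never actually stored is to check the age of the newest backup found in the backup location. The following Python sketch shows the idea under stated assumptions: how the backup timestamps are collected (for example, by listing the backup directory or bucket) is out of scope, the function names are hypothetical, and the default maximum age of one day is only an example value.

```python
from datetime import datetime, timedelta
from typing import Iterable, Optional


def newest_backup_age(backup_times: Iterable[datetime],
                      now: datetime) -> Optional[timedelta]:
    """Return the age of the most recent backup, or None if there is none."""
    times = list(backup_times)
    if not times:
        return None
    return now - max(times)


def backup_is_fresh(backup_times: Iterable[datetime], now: datetime,
                    max_age: timedelta = timedelta(days=1)) -> bool:
    """True if at least one stored backup is no older than max_age
    (max_age defaults to one day, an assumed example threshold)."""
    age = newest_backup_age(backup_times, now)
    return age is not None and age <= max_age


if __name__ == "__main__":
    now = datetime(2024, 6, 10, 12, 0)
    stored = [datetime(2024, 6, 8, 2, 0), datetime(2024, 6, 10, 2, 0)]
    print(backup_is_fresh(stored, now))  # newest backup is 10 hours old
    print(backup_is_fresh([], now))      # no backups stored at all
```

A check like this only confirms that recent backup files exist. It does not replace the periodic restore test recommended above, which is the only way to confirm that a backup is usable.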

Setting up an EKS Cluster

To set up a Kubernetes cluster on Elastic Kubernetes Service (EKS):

- Create an EKS cluster (see Create your Amazon EKS cluster for more information).

  - Make sure to create a node group with an instance type that has 16 CPUs and 128 GB of memory (r5d.4xlarge is recommended).

  - The number of allocated nodes in the EKS cluster must be equivalent to the number of Dremio executors plus one (1) for the Dremio main coordinator.

- Connect to the cluster (see Connect kubectl to an EKS cluster by creating a kubeconfig file for more information).

- Install Helm (see Installing Helm in EKS for more information).
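The node-count rule from the first step (one node per executor plus one for the main coordinator) can be written as a small check to run before deployment. This is a minimal Python sketch with hypothetical function names. It covers only the rule stated above and does not account for ZooKeeper nodes or scale-out coordinators.

```python
def expected_node_count(executors: int, main_coordinators: int = 1) -> int:
    """One node per executor, plus one for the main coordinator."""
    if executors < 0:
        raise ValueError("executors must not be negative")
    return executors + main_coordinators


def cluster_size_matches(allocated_nodes: int, executors: int) -> bool:
    """True if the allocated node count equals executors + 1."""
    return allocated_nodes == expected_node_count(executors)


if __name__ == "__main__":
    print(expected_node_count(5))      # 5 executors + 1 main coordinator
    print(cluster_size_matches(5, 5))  # one node short
```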

With your EKS cluster set up, read the next section about Dremio Console Settings and then proceed to Deploying Dremio.

Dremio Console Settings

Read these Dremio UI settings before deploying Dremio.

Workload Management

Dremio recommends keeping engine sizes to a maximum of 10 executor pods (with the standard 16 CPUs and 128 GB of memory) to avoid over-parallelization of queries.
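To see how the 10-pod cap affects planning, the sketch below splits a total executor count into the smallest number of engines that respects the cap. It is written in Python for illustration only: the function name `split_into_engines` is hypothetical, and distributing executors evenly across engines is an assumption. In practice, engines are usually sized per workload rather than evenly.

```python
from typing import List

MAX_EXECUTORS_PER_ENGINE = 10  # recommended cap from the text above


def split_into_engines(total_executors: int,
                       max_per_engine: int = MAX_EXECUTORS_PER_ENGINE
                       ) -> List[int]:
    """Return engine sizes that sum to total_executors, none above the cap.

    Uses the fewest engines possible and spreads executors evenly
    (an assumed policy, not a Dremio requirement).
    """
    if total_executors < 0:
        raise ValueError("total_executors must not be negative")
    if max_per_engine < 1:
        raise ValueError("max_per_engine must be at least 1")
    if total_executors == 0:
        return []
    engines = -(-total_executors // max_per_engine)  # ceiling division
    base, extra = divmod(total_executors, engines)
    # The first `extra` engines get one additional executor each.
    return [base + 1] * extra + [base] * (engines - extra)


if __name__ == "__main__":
    print(split_into_engines(25))  # three engines: 9, 8, and 8 executors
```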

Dremio recommends splitting workloads into high and low-cost queries, as well as setting up dedicated workload queues for Reflections, metadata refresh, and table optimization jobs. For further information, see Dremio's Well-Architected Framework.

Support Keys

Dremio support keys must only be used when advised by Dremio Support, as they can fundamentally alter how the application behaves, and improper usage can lead to unexpected failures.

Deploying Dremio

To deploy Dremio on EKS, follow the steps in Installing Dremio on Kubernetes in the dremio-cloud-tools repository on GitHub.

High Availability

High availability is dependent on the Kubernetes infrastructure. If any of the Kubernetes pods go down for any reason, Kubernetes brings up another pod to replace the pod that is out of commission.

- The Dremio main coordinator and secondary-coordinator pods are each a StatefulSet. If the main coordinator pod goes down, it recovers with the associated persistent volume and Dremio metadata preserved. Secondary-coordinator pods do not have a persistent volume.

- The Dremio executor pods are a StatefulSet with an associated persistent volume. If an executor pod goes down, it recovers with the associated persistent volume and data preserved.
